The lighty.io RESTCONF-NETCONF (RNC) application allows you to easily initialize, start, and use the most widely used OpenDaylight services, including clustering, and optionally to add custom business logic. PANTHEON.tech provides a ready-made Helm chart with the lighty.io RNC application, which can be used for Kubernetes deployment.

Clustering is a mechanism that enables multiple processes and programs to work together as one entity.

For example, when you search for something on google.com, it may seem like your search request is processed by only one web server. In reality, your search request is processed by many web servers connected in a cluster. Similarly, you can have multiple instances of OpenDaylight working together as one entity.

The advantages of clustering are:

- Scaling: If you have multiple instances of OpenDaylight running, you can potentially do more work and store more data than you could with only one instance. You can also break up your data into smaller chunks (shards) and either distribute that data across the cluster or perform certain operations on certain members of the cluster.

- High Availability: If you have multiple instances of OpenDaylight running and one of them crashes, you will still have the other instances working and available.

- Data Persistence: You will not lose any data stored in OpenDaylight after a manual restart or a crash.

This article demonstrates how to configure the lighty.io RNC application to use clustering, how to rescale the cluster, and how to connect a device to the cluster.

Add the PANTHEON.tech Helm Repository to MicroK8s

To deploy the lighty.io RNC application, we will use the MicroK8s local Kubernetes engine and show how to install it. Feel free to use any other local Kubernetes engine you have installed.

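1. Install MicroK8s with snap and add your user to the microk8s group.

sudo snap install microk8s --classic
sudo usermod -a -G microk8s $USER
sudo chown -f -R $USER ~/.kube

Log out and log back in (or run newgrp microk8s) so that the group change takes effect.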

2. Enable the required add-ons.

microk8s enable dns helm3

3. Add the PANTHEON.tech Helm repository to microk8s.

microk8s.helm3 repo add pantheon-helm-repo https://pantheontech.github.io/helm-charts/

4. Update the repository.

microk8s.helm3 repo update

Configuring your lighty.io RNC Clustering app

Before we demonstrate how our cluster functions, we need to properly configure the lighty.io RNC app.

The lighty.io RNC application can be configured through a Helm values file. The default values.yaml file of the RNC app can be found in the lighty.io GitHub repository.

1. Set up the configuration with the --set flag. To enable clustering, set:

- lighty.replicaCount=3 // Configures the size of the cluster.

- lighty.akka.isSingleNode=false // If true, the Akka configuration is overwritten with the default configuration.

- nodePort.useNodePort=false // Uses a ClusterIP service rather than a NodePort service.

- lighty.moduleTimeOut=120 // The cluster takes some time to deploy, so set the timeout to a higher value.

Note: lighty.akka.isSingleNode must be set to false when using clustering.

microk8s.helm3 install lighty-rnc-app pantheon-helm-repo/lighty-rnc-app-helm --version 16.1.0 --set lighty.replicaCount=3,lighty.akka.isSingleNode=false,nodePort.useNodePort=false,lighty.moduleTimeOut=120

To modify the configuration after the app is deployed, replace “install” with “upgrade” in the command.
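To rescale the cluster, only lighty.replicaCount has to change. A small helper that builds the --set string for a given cluster size keeps the other clustering values the same between install and upgrade. This is a minimal sketch. The function name rnc_cluster_set_flags is hypothetical and is not part of lighty.io or Helm. It uses the values listed above.

```shell
#!/bin/sh
# Hypothetical helper: prints the --set string with the clustering values
# from this article for the cluster size given as $1 (default 3).
rnc_cluster_set_flags() {
  replicas=${1:-3}
  # Reject empty values, non-digits and leading zeros (including "0").
  case $replicas in
    ''|*[!0-9]*|0*)
      echo "replica count must be a positive integer, got: $replicas" >&2
      return 1 ;;
  esac
  printf 'lighty.replicaCount=%s,lighty.akka.isSingleNode=false,nodePort.useNodePort=false,lighty.moduleTimeOut=120\n' "$replicas"
}

# Example: rescale the deployment to 5 members (upgrade --install also
# performs the first install if the release does not exist yet).
#   microk8s.helm3 upgrade --install lighty-rnc-app pantheon-helm-repo/lighty-rnc-app-helm \
#     --version 16.1.0 --set "$(rnc_cluster_set_flags 5)"
```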

1.1 Verify that the lighty-rnc-app is deployed.

microk8s.helm3 ls

You should see lighty-rnc-app with the status “deployed”.
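In a script, you can check the same thing by looking for the release row and its "deployed" status in the helm ls output. This is a sketch that assumes the default whitespace-separated table printed by helm ls. The helper name release_deployed is hypothetical.

```shell
#!/bin/sh
# Reads `helm ls` output on stdin and succeeds only if the release named $1
# has a column equal to "deployed" (the STATUS column).
release_deployed() {
  awk -v r="$1" '
    $1 == r { for (i = 2; i <= NF; i++) if ($i == "deployed") found = 1 }
    END { exit (found ? 0 : 1) }'
}

# Example:
#   microk8s.helm3 ls | release_deployed lighty-rnc-app && echo "lighty-rnc-app is deployed"
```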

2. Alternatively, set up the configuration using a customized values.yaml file.

2.1 Download the values.yaml file from lighty-core.

2.2 Update the image to your desired version, for example:

image:
  name: ghcr.io/pantheontech/lighty-rnc
  version: 16.1.0
  pullPolicy: IfNotPresent

2.3 Update the following values in the values.yaml file:

- lighty.replicaCount=3 // Configures the size of the cluster.

- lighty.akka.isSingleNode=false // If true, the Akka configuration is overwritten with the default configuration.

- nodePort.useNodePort=false // Uses a ClusterIP service rather than a NodePort service.

- lighty.moduleTimeOut=120 // The cluster takes some time to deploy, so set the timeout to a higher value.

Note: lighty.akka.isSingleNode must be set to false when using clustering.
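You do not have to edit the whole downloaded file. A short override file that contains only the changed keys also works, because Helm merges values passed with --values over the chart's defaults. The sketch below writes such a file with a heredoc. The file name values-cluster.yaml is our own choice. The key nesting is inferred from the dotted names used with --set above.

```shell
#!/bin/sh
# Writes a minimal Helm override file with the image and clustering values
# from this article. Dotted --set keys become nested YAML maps, e.g.
# lighty.akka.isSingleNode -> lighty: / akka: / isSingleNode:.
# The file name values-cluster.yaml is hypothetical.
cat > values-cluster.yaml <<'EOF'
image:
  name: ghcr.io/pantheontech/lighty-rnc
  version: 16.1.0
  pullPolicy: IfNotPresent
lighty:
  replicaCount: 3
  moduleTimeOut: 120
  akka:
    isSingleNode: false
nodePort:
  useNodePort: false
EOF

# Example:
#   microk8s.helm3 install lighty-rnc-app pantheon-helm-repo/lighty-rnc-app-helm \
#     --version 16.1.0 --values values-cluster.yaml
```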

2.4 Deploy the lighty.io RNC app with the modified values.yaml file.

microk8s.helm3 install lighty-rnc-app pantheon-helm-repo/lighty-rnc-app-helm --version 16.1.0 --values [VALUES_YAML_FILE]

To modify the configuration after the app is deployed, replace “install” with “upgrade” in the command.

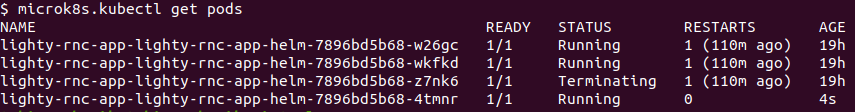

3. Verify that all pods have started. You should see as many pods as the value of replicaCount.

microk8s.kubectl get pods
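The pods can take a while to become ready (see moduleTimeOut above). The filter below counts pods that are Running with all containers ready, so a script can compare the count against replicaCount. It assumes the default column layout of kubectl get pods --no-headers (NAME READY STATUS RESTARTS AGE). The function name count_ready_pods is hypothetical.

```shell
#!/bin/sh
# Reads `kubectl get pods --no-headers` output on stdin and prints how many
# pods have STATUS "Running" and a READY column of the form n/n.
count_ready_pods() {
  awk '$3 == "Running" { split($2, c, "/"); if (c[1] == c[2]) n++ }
       END { print n + 0 }'
}

# Example:
#   microk8s.kubectl get pods -l app.kubernetes.io/name=lighty-rnc-app-helm --no-headers | count_ready_pods
```

Alternatively, microk8s.kubectl wait --for=condition=Ready pod -l app.kubernetes.io/name=lighty-rnc-app-helm --timeout=300s blocks until all matching pods report Ready, or until the timeout expires.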

Create a testing device with the lighty.io NETCONF Simulator

For testing, we need a NETCONF device. The lighty.io NETCONF Simulator is well suited for this, and we will run it inside a Docker container. The lighty.io repository contains a Dockerfile that builds an image of this simulated device.

1. Download the lighty.io NETCONF Simulator Dockerfile to a separate folder.

2. Build a Docker image from the Dockerfile.

sudo docker build -t lighty-netconf-simulator .

3. Start the Docker container with the testing device on port 17830. To use a different port, change the -p parameter.

sudo docker run -d --rm --name netconf-simulator -p17830:17830 lighty-netconf-simulator:latest

4. Check the IP address assigned to the Docker container. It will be used as the DEVICE_IP parameter in the requests.

sudo docker inspect -f '{{range.NetworkSettings.Networks}}{{.IPAddress}}{{end}}' netconf-simulator
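The Go template above prints the addresses of all attached networks back to back, with no separator. A container attached to more than one network therefore yields an unusable value. A quick check before using the result as DEVICE_IP catches this. The helper is_ipv4 is hypothetical, and it only checks the dotted-quad shape, not the 0-255 range of each octet.

```shell
#!/bin/sh
# Succeeds if $1 looks like a single dotted-quad IPv4 address.
# Shape check only: each octet is 1-3 digits; values above 255 are not rejected.
is_ipv4() {
  printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'
}

# Example:
#   DEVICE_IP=$(sudo docker inspect -f '{{range.NetworkSettings.Networks}}{{.IPAddress}}{{end}}' netconf-simulator)
#   is_ipv4 "$DEVICE_IP" || echo "unexpected address: $DEVICE_IP" >&2
```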

Clustering in the lighty.io RNC App

As mentioned at the beginning of this tutorial, the key to understanding clustering is to see many of the same instances as one functioning entity.

To show that the lighty.io RNC cluster is alive and well, we will demonstrate its resilience. When we manually remove the leader from the cluster, the system does not fall apart. Instead, the remaining members hold an election and choose a new leader. In practical terms, the cluster keeps working while its leader is down, which shows that it functions as one entity.

1. Show the IP addresses of all pods.

microk8s.kubectl get pods -l app.kubernetes.io/name=lighty-rnc-app-helm -o custom-columns=":status.podIP"

2. Use one of the IP addresses from the previous step as HOST_IP to view the members of the cluster.

curl --request GET 'http://[HOST_IP]:8558/cluster/members/'

Your response should look like this:

{
  "leader": "akka://opendaylight-cluster-data@10.1.101.177:2552",
  "members": [
    {
      "node": "akka://opendaylight-cluster-data@10.1.101.177:2552",
      "nodeUid": "4687308041747729846",
      "roles": [
        "member-10.1.101.177",
        "dc-default"
      ],
      "status": "Up"
    },
    {
      "node": "akka://opendaylight-cluster-data@10.1.101.178:2552",
      "nodeUid": "-29348997399314594",
      "roles": [
        "member-10.1.101.178",
        "dc-default"
      ],
      "status": "Up"
    }
  ],
  "oldest": "akka://opendaylight-cluster-data@10.1.101.177:2552",
  "oldestPerRole": {
    "member-10.1.101.177": "akka://opendaylight-cluster-data@10.1.101.177:2552",
    "dc-default": "akka://opendaylight-cluster-data@10.1.101.177:2552",
    "member-10.1.101.178": "akka://opendaylight-cluster-data@10.1.101.178:2552"
  },
  "selfNode": "akka://opendaylight-cluster-data@10.1.101.177:2552",
  "unreachable": []
}

In the response, you should see which member was elected as the leader, as well as all other members.

- Tip: Use Postman for better response readability
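The leader's IP address is needed again in step 5. The filter below extracts it from the response. It assumes the leader address has the form akka://opendaylight-cluster-data@IP:PORT shown above, and leader_ip is a hypothetical helper name. If jq is installed, jq -r .leader prints the full address instead.

```shell
#!/bin/sh
# Reads the JSON body of GET http://[HOST_IP]:8558/cluster/members/ on stdin
# and prints only the IP address part of the "leader" field.
leader_ip() {
  sed -n 's|.*"leader"[[:space:]]*:[[:space:]]*"akka://[^@]*@\([0-9.]*\):[0-9]*".*|\1|p'
}

# Example:
#   curl -s 'http://[HOST_IP]:8558/cluster/members/' | leader_ip
```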

3. Add the device to one of the members.

curl --request PUT 'http://[HOST_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node' \
--header 'Content-Type: application/json' \
--data-raw '{
  "netconf-topology:node": [
    {
      "node-id": "new-node",
      "host": "[DEVICE_IP]",
      "port": 17830,
      "username": "admin",
      "password": "admin",
      "tcp-only": false,
      "keepalive-delay": 0
    }
  ]
}'
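When scripting this step, building the request body with printf saves you from hand-editing the [DEVICE_IP] placeholder. The sketch reproduces the body above and takes the node ID, host, and port as arguments. The function name netconf_node_payload is hypothetical. The admin/admin credentials are the ones used in this article, and the arguments are assumed to contain no double quotes.

```shell
#!/bin/sh
# Prints the request body used above, filling in node ID ($1), host ($2)
# and port ($3). Arguments must not contain double quotes.
netconf_node_payload() {
  printf '{"netconf-topology:node": [{"node-id": "%s", "host": "%s", "port": %s, "username": "admin", "password": "admin", "tcp-only": false, "keepalive-delay": 0}]}\n' "$1" "$2" "$3"
}

# Example (the node ID in the URL must match "node-id" in the body):
#   curl --request PUT "http://$HOST_IP:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node" \
#     --header 'Content-Type: application/json' \
#     --data-raw "$(netconf_node_payload new-node "$DEVICE_IP" 17830)"
```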

4. Verify that the device was added to all members of the cluster.

curl --request GET 'http://[MEMBER_1_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node'
curl --request GET 'http://[MEMBER_2_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node'
...

Every member of the cluster should return the same device, with the same values:

{
  "network-topology:node": [
    {
      "node-id": "new-node",
      "netconf-node-topology:connection-status": "connected",
      "netconf-node-topology:username": "admin",
      "netconf-node-topology:password": "admin",
      "netconf-node-topology:available-capabilities": {
        "available-capability": [
          {
            "capability": "urn:ietf:params:netconf:base:1.1",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "urn:ietf:params:netconf:capability:notification:1.0",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "urn:ietf:params:netconf:capability:candidate:1.0",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "urn:ietf:params:netconf:base:1.0",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "(urn:ietf:params:xml:ns:yang:ietf-inet-types?revision=2013-07-15)ietf-inet-types",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "(urn:opendaylight:yang:extension:yang-ext?revision=2013-07-09)yang-ext",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "(urn:tech.pantheon.netconfdevice.network.topology.rpcs?revision=2018-03-20)network-topology-rpcs",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "(urn:ietf:params:xml:ns:yang:ietf-yang-types?revision=2013-07-15)ietf-yang-types",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "(urn:TBD:params:xml:ns:yang:network-topology?revision=2013-10-21)network-topology",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "(urn:ietf:params:xml:ns:yang:ietf-netconf-monitoring?revision=2010-10-04)ietf-netconf-monitoring",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "(urn:opendaylight:netconf-node-optional?revision=2019-06-14)netconf-node-optional",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "(urn:ietf:params:xml:ns:netconf:notification:1.0?revision=2008-07-14)notifications",
            "capability-origin": "device-advertised"
          },
          {
            "capability": "(urn:opendaylight:netconf-node-topology?revision=2015-01-14)netconf-node-topology",
            "capability-origin": "device-advertised"
          }
        ]
      },
      "netconf-node-topology:host": "172.17.0.2",
      "netconf-node-topology:port": 17830,
      "netconf-node-topology:tcp-only": false,
      "netconf-node-topology:keepalive-delay": 0
    }
  ]
}
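With several members, comparing the full responses by eye is tedious. The sketch below extracts only connection-status from each member's response, using the JSON field name shown above. The helpers connection_status and check_members are hypothetical, and the member IP addresses are passed as arguments (for example, the ones from step 1).

```shell
#!/bin/sh
# Prints the value of "netconf-node-topology:connection-status" from a
# RESTCONF node response read on stdin (e.g. connected, connecting).
connection_status() {
  sed -n 's|.*"netconf-node-topology:connection-status"[[:space:]]*:[[:space:]]*"\([a-z-]*\)".*|\1|p'
}

# Queries node new-node on every member IP given as an argument and prints
# "IP status" per member; "<missing>" means no status was found.
check_members() {
  for ip in "$@"; do
    conn=$(curl -s "http://$ip:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node" | connection_status)
    echo "$ip ${conn:-<missing>}"
  done
}

# Example:
#   check_members $(microk8s.kubectl get pods -l app.kubernetes.io/name=lighty-rnc-app-helm \
#     -o jsonpath='{.items[*].status.podIP}')
```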

5. Find the leader and delete it

5.1 Show all pods. This returns the name of each pod.

microk8s.kubectl get pods

5.2 Find the leader by IP address

microk8s.kubectl get pod [NAME] -o custom-columns=":status.podIP" | xargs

In step 2, we found the IP address assigned to the leader. Run the command above for each pod name from step 5.1 until it prints that IP address. That pod is the leader.
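Instead of querying each pod separately, you can list all name/IP pairs in a single call and filter for the leader's IP. The helper pod_name_for_ip is hypothetical, and the pod names in any example output are placeholders. kubectl also accepts --field-selector status.podIP=[LEADER_IP] when listing pods, which is another way to do the same lookup.

```shell
#!/bin/sh
# Reads "NAME IP" pairs on stdin, as printed by
#   microk8s.kubectl get pods --no-headers -o custom-columns=NAME:.metadata.name,IP:.status.podIP
# and prints the name of the pod whose IP equals $1.
pod_name_for_ip() {
  awk -v ip="$1" '$2 == ip { print $1 }'
}

# Example:
#   microk8s.kubectl get pods --no-headers \
#     -o custom-columns=NAME:.metadata.name,IP:.status.podIP | pod_name_for_ip 10.1.101.177
```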

5.3 Delete the pod.

microk8s.kubectl delete -n default pod [LEADER_NAME]

5.4 Verify that the pod is terminating

microk8s.kubectl get pods

You should see the old leader with status “Terminating” and a new pod running, which will replace it.

6. Verify that a new leader was elected.

curl --request GET 'http://[HOST_IP]:8558/cluster/members/'

{
  "leader": "akka://opendaylight-cluster-data@10.1.101.178:2552",
  "members": [
    {
      ...

After the pod was deleted, a new leader was elected from the remaining pods.
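The election can take a few seconds, so a script should poll instead of checking once. The sketch below repeatedly runs a command that prints the /cluster/members/ response, until the leader IP differs from the old one. It assumes the akka://...@IP:PORT leader format shown above. The function name, the retry count, and the 5-second interval are our own choices.

```shell
#!/bin/sh
# Usage: wait_for_leader_change OLD_IP MAX_TRIES CMD [ARGS...]
# Runs CMD (which must print the /cluster/members/ JSON) up to MAX_TRIES
# times, 5 seconds apart, and prints the first leader IP that differs
# from OLD_IP. Returns 1 if the leader did not change.
wait_for_leader_change() {
  old_ip=$1; tries=$2; shift 2
  while [ "$tries" -gt 0 ]; do
    new_ip=$("$@" 2>/dev/null |
      sed -n 's|.*"leader"[[:space:]]*:[[:space:]]*"akka://[^@]*@\([0-9.]*\):[0-9]*".*|\1|p')
    if [ -n "$new_ip" ] && [ "$new_ip" != "$old_ip" ]; then
      echo "$new_ip"
      return 0
    fi
    tries=$((tries - 1))
    [ "$tries" -gt 0 ] && sleep 5
  done
  return 1
}

# Example (HOST_IP must be a member that is still running, not the deleted leader):
#   wait_for_leader_change 10.1.101.177 24 curl -s "http://$HOST_IP:8558/cluster/members/"
```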

7. Verify that the new pod also contains the device. The response should be the same as in step 4.

curl --request GET 'http://[NEW_MEMBER_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node'

8. Delete the device

curl --request DELETE 'http://[HOST_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node'

9. Verify that the testing device was removed from all cluster members

curl --request GET 'http://[MEMBER_1_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf'
curl --request GET 'http://[MEMBER_2_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf'
...

Your response should look like this:

{
  "network-topology:topology": [
    {
      "topology-id": "topology-netconf"
    }
  ]
}
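To check many members in a script, it is enough to test whether the topology response still contains a node list. This sketch assumes the node list appears under a key named node, optionally prefixed with network-topology:, as in the responses above. The helper name topology_has_nodes is hypothetical.

```shell
#!/bin/sh
# Reads a topology-netconf response on stdin. Succeeds if it still contains
# a "node" list (plain or module-prefixed key), fails if no node key is found.
topology_has_nodes() {
  grep -q '[":]node"[[:space:]]*:'
}

# Example:
#   for ip in [MEMBER_1_IP] [MEMBER_2_IP]; do
#     curl -s "http://$ip:8888/restconf/data/network-topology:network-topology/topology=topology-netconf" |
#       topology_has_nodes && echo "$ip still has nodes"
#   done
```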

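10. Logs from the device can be shown by executing the following command. Because the container was started with --name netconf-simulator, that name can be used in place of the container ID.

sudo docker logs [CONTAINER_ID]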

11. Logs from the lighty.io RNC app can be shown by executing the following command:

microk8s.kubectl logs [POD_NAME]
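Once a pod has been deleted, as in step 5, kubectl can no longer fetch its logs. Saving the logs of all members beforehand can therefore help with troubleshooting. This is a minimal sketch. The function save_pod_logs and the KUBECTL override variable are hypothetical.

```shell
#!/bin/sh
# Usage: save_pod_logs DIR POD...
# Saves the logs of each given pod to DIR/POD.log. KUBECTL defaults to
# microk8s.kubectl and can be overridden (e.g. KUBECTL=kubectl).
save_pod_logs() {
  dir=$1; shift
  mkdir -p "$dir" || return 1
  for pod in "$@"; do
    ${KUBECTL:-microk8s.kubectl} logs "$pod" > "$dir/$pod.log" 2>&1 ||
      echo "could not fetch logs for $pod" >&2
  done
}

# Example: collect logs from every RNC pod before deleting the leader.
#   save_pod_logs rnc-logs $(microk8s.kubectl get pods -l app.kubernetes.io/name=lighty-rnc-app-helm \
#     -o jsonpath='{.items[*].metadata.name}')
```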

This post explained how to start a containerized lighty.io RNC application and configure it to use clustering, either from a values file or with the --set flag. It showed how to set the size of the cluster to your liking and how to change it later with an upgrade. It also showed how to connect a simulated device to the cluster and how the cluster elects a new leader when the current one is removed.

by Tobiáš Pobočík & Peter Šuňa
