[OpenDaylight] Migrating Bierman RESTCONF to RFC 8040

The RESTCONF draft draft-bierman-netconf-restconf-02, named after A. Bierman, defines an HTTP-based protocol for manipulating YANG-defined data sets through a programmatic interface. It relies on the same datastore concepts as NETCONF, with modifications to enable HTTP-based CRUD operations.

Learn how to migrate from the legacy draft-bierman-netconf-restconf-02 to RFC 8040 in OpenDaylight.

NETCONF vs. RESTCONF

While NETCONF uses SSH for network device management, RESTCONF supports secure HTTP access (HTTPS). RESTCONF also allows for easy automation through a RESTful API, where the syntax of the datastore is defined in YANG.

YANG is a data modeling language used to model configuration, state data, and administrative actions. PANTHEON.tech offers an open-source tool for verifying YANG data in OpenDaylight or lighty.io, as well as an IntelliJ plugin called YANGinator.

What is YANG?

The YANG data modeling language is widely viewed as an essential tool for modeling configuration and state data manipulated over NETCONF, RESTCONF, or gNMI.

RESTCONF/NETCONF Architecture

NETCONF defines configuration datastores and a set of CRUD operations (create, retrieve, update, delete). RESTCONF does the same, but exposes them through a REST-style API over HTTPS.

The importance of RESTCONF therefore lies in its programmability and flexibility in network configuration automation use cases.
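The CRUD operations that RESTCONF shares with NETCONF map onto ordinary HTTP methods. The table below is a minimal sketch of that mapping as described in RFC 8040; the dictionary and function names are illustrative only and are not part of any OpenDaylight API.

```python
# HTTP methods defined by RFC 8040, grouped by the CRUD operation they perform.
# The second element of each pair names the closest NETCONF operation.
CRUD_TO_HTTP = {
    "create": [("POST", "<edit-config> with operation=create")],
    "retrieve": [("GET", "<get-config> or <get>")],
    "update": [
        ("PUT", "<edit-config> with operation=create/replace"),
        ("PATCH", "<edit-config> with operation=merge (plain patch)"),
    ],
    "delete": [("DELETE", "<edit-config> with operation=delete")],
}


def http_methods_for(operation):
    """Return the HTTP method names that implement a CRUD operation."""
    return [method for method, _ in CRUD_TO_HTTP[operation.lower()]]
```

For example, `http_methods_for("update")` returns both `PUT` (replace the target resource) and `PATCH` (merge into it), which is the main choice a client has to make when modifying configuration.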

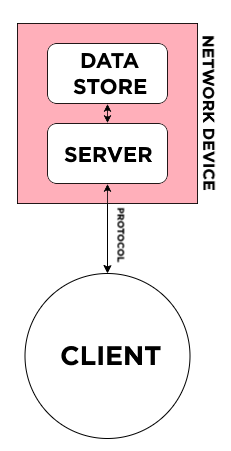

By design, the architecture of this communication looks the same in both cases: a network device, composed of a datastore (modeled in YANG) and a server (RESTCONF or NETCONF), communicates with the target client through a protocol (RESTCONF or NETCONF).

RESTCONF/NETCONF client communication flow.

RESTful API

REST is a widely adopted set of rules for building stateless, reliable web APIs. RESTful is an informal term for a web API that follows the REST constraints.

RESTful APIs are primarily built on HTTP: resources are addressed by URLs, optionally with URL-encoded parameters, and data is transmitted as JSON or XML.
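In RESTCONF, the JSON and XML encodings are selected through the `application/yang-data+json` and `application/yang-data+xml` media types from RFC 8040. The following sketch only builds a request object with the Python standard library and does not send it; the controller address and the `example:system` resource are hypothetical placeholders.

```python
import json
import urllib.request


def build_restconf_request(base_url, path, method="GET", body=None, encoding="json"):
    """Build (but do not send) an HTTP request for a RESTCONF resource."""
    media_type = f"application/yang-data+{encoding}"
    headers = {"Accept": media_type}
    data = None
    if body is not None:
        headers["Content-Type"] = media_type
        # JSON bodies are passed as Python objects, XML bodies as strings.
        text = json.dumps(body) if encoding == "json" else body
        data = text.encode("utf-8")
    url = f"{base_url.rstrip('/')}/{path.lstrip('/')}"
    return urllib.request.Request(url, data=data, headers=headers, method=method)


# Hypothetical controller address and resource path, for illustration only.
request = build_restconf_request(
    "https://controller.example:8443/restconf",
    "data/example:system",
    method="PUT",
    body={"example:system": {"hostname": "edge-1"}},
)
```

Passing the resulting object to `urllib.request.urlopen` would send it; authentication and TLS settings are left out because they depend on the deployment.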

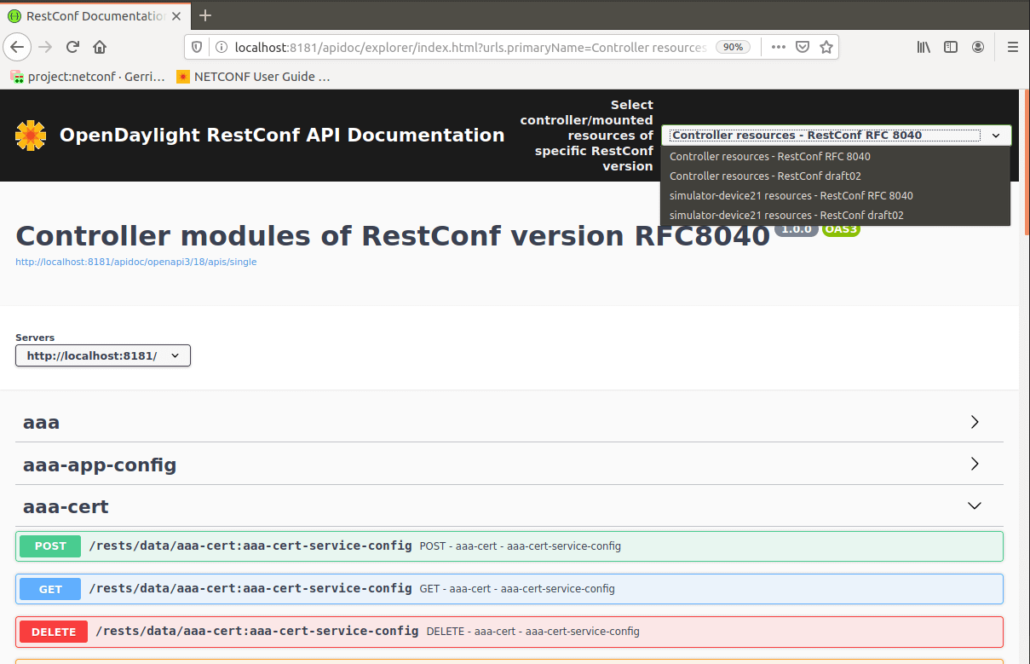

OpenDaylight was one of the early adopters of the RESTCONF protocol. For increased compatibility, two RESTCONF implementations are supported today: the legacy draft-bierman-netconf-restconf-02 and RFC 8040.

What’s New in RFC 8040?

The biggest difference between the RFC 8040 implementation of RESTCONF and the legacy Bierman implementation is support for YANG 1.1.

YANG 1.1 introduces a new type of RPC operation, called actions. Actions enable RPC operations to be attached to selected nodes in the data schema. RFC 8040 also relies on YANG Library, a module that lists the YANG modules a server supports, together with their revisions, features, and deviations.
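Because an action is attached to a data node, RFC 8040 invokes it with a POST to the path of that node followed by the action name. The sketch below builds such a path; the helper names are hypothetical, and the `example-actions:interfaces` model with its `reset` action is borrowed from the examples in the RFC rather than from an OpenDaylight model.

```python
from urllib.parse import quote


def list_entry(list_name, *keys):
    """Encode a list entry as name=key1,key2 with percent-encoded key values."""
    encoded = ",".join(quote(str(key), safe="") for key in keys)
    return f"{list_name}={encoded}"


def action_url(root, node_segments, action):
    """Return the path to POST to in order to invoke an action on a data node."""
    return "/".join([root.rstrip("/"), "data", *node_segments, action])


# Reset interface eth0: the action is addressed under the node it acts on.
url = action_url(
    "/restconf",
    ["example-actions:interfaces", list_entry("interface", "eth0")],
    "reset",
)
```

Here `url` is `/restconf/data/example-actions:interfaces/interface=eth0/reset`. Key values containing reserved characters, such as the slash in `GigabitEthernet0/1`, are percent-encoded by `list_entry`.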

Other new features include new XPath functions, the option to define asynchronous notifications tied to schema nodes, and more. For more detail, we recommend reading this comprehensive list of changes.

Migration from Legacy RESTCONF Implementation

Since the RFC 8040 RESTCONF implementation is now generally available and ready to replace the legacy Bierman draft, PANTHEON.tech has decided to stop supporting the draft implementation and help customers with migration.
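A large part of a client-side migration is rewriting request paths. The sketch below assumes the base paths commonly used in OpenDaylight, `/restconf` for the Bierman draft and `/rests` for RFC 8040; verify both against your own deployment. It only translates the datastore prefix. List keys, which the draft writes as `list/key` and RFC 8040 writes as `list=key`, cannot be converted without the YANG schema and are left untouched. The function and table names are hypothetical.

```python
# Legacy top-level resource -> (RFC 8040 resource, query parameter to add).
LEGACY_TO_RFC8040 = {
    "config": ("data", "content=config"),
    "operational": ("data", "content=nonconfig"),
    "operations": ("operations", None),
}


def migrate_path(legacy_path, legacy_root="/restconf", new_root="/rests"):
    """Translate the datastore prefix of a draft-style path to RFC 8040 style."""
    path, _, existing_query = legacy_path.partition("?")
    prefix = legacy_root.rstrip("/") + "/"
    if not path.startswith(prefix):
        raise ValueError(f"path does not start with {prefix!r}: {legacy_path!r}")
    resource, _, rest = path[len(prefix):].partition("/")
    if resource not in LEGACY_TO_RFC8040:
        raise ValueError(f"unknown legacy resource: {resource!r}")
    new_resource, content_query = LEGACY_TO_RFC8040[resource]
    new_path = f"{new_root.rstrip('/')}/{new_resource}"
    if rest:
        new_path += "/" + rest
    # Keep any query parameters that were already present on the legacy path.
    query = "&".join(q for q in (content_query, existing_query) if q)
    return f"{new_path}?{query}" if query else new_path
```

For instance, `migrate_path("/restconf/config/network-topology:network-topology")` yields `/rests/data/network-topology:network-topology?content=config`. Paths that address list entries still need their keys rewritten by hand or with schema-aware tooling.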

Contact PANTHEON.tech for support in migrating RESTCONF implementations from draft-bierman-netconf-restconf-02 to RFC 8040.