SONiC Enterprise Data Center Orchestration

Posted in Blog, News by PANTHEON.tech

SandWork is now Generally Available

SONiC (Software for Open Networking in the Cloud) is an open-source network operating system that has become increasingly popular in recent years. Hyperscalers, large enterprises, and cloud providers use this powerful and flexible platform to build their data center networks.

SONiC was originally developed by Microsoft as a network operating system for large-scale data center environments. Thanks to its flexibility, scalability, and extensibility, the open-source project has since attracted contributions from many large organizations and individual contributors.

Another key benefit of SONiC is its support for multi-vendor environments. Unlike many proprietary network operating systems, SONiC is vendor-agnostic and can be used with a wide range of network hardware from different manufacturers. This enables enterprises to choose the best network hardware for their needs, without being tied to a single vendor’s solutions.

In addition, SONiC includes built-in security features, such as support for secure communication and role-based access control. This makes it a strong fit for enterprises that need robust measures to protect their data center infrastructure.

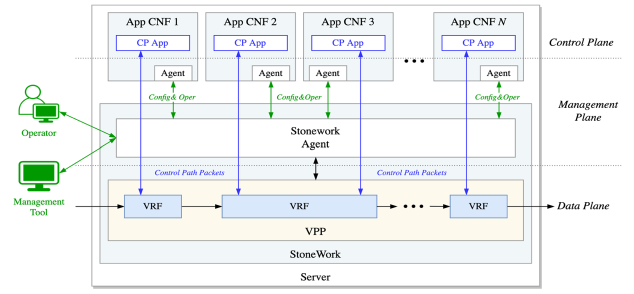

To maximize and build upon the benefits of SONiC in the data center, PANTHEON.tech has leveraged its open-source heritage and experience in network automation and Software-Defined Networking to develop SandWork, which is now generally available.

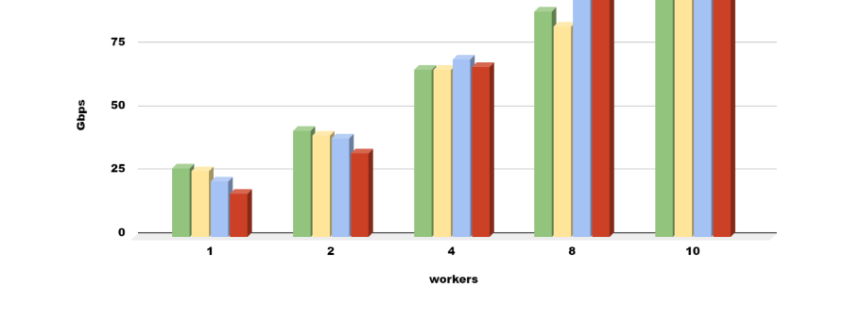

One of the key benefits of SandWork is its ability to orchestrate network infrastructure in the enterprise data center. SandWork provides a programmable framework and an intuitive user interface that enable network administrators to automate the configuration and management of data center network devices. This significantly reduces the time and effort required to manage the data center network fabric, while also increasing efficiency and scalability.

SandWork uses a modular design that lets administrators customize the platform to meet their specific needs. It provides a rich set of APIs and tools that developers can use to build their own applications and scripts for automating network management tasks. This extensibility makes SandWork well suited to complex and dynamic data center environments.

SandWork is also highly scalable, supporting large-scale data center environments with thousands of network devices. Its advanced infrastructure management features make it easier for network administrators to identify and troubleshoot issues in the data center, helping to maximize uptime and availability.

Overall, SandWork is a powerful and flexible platform for managing and orchestrating enterprise data centers. With its advanced features for network management and security, SandWork is a valuable tool for any organization looking to optimize their data center operations.

by the PANTHEON.tech Team | Leave us your feedback on this post!

You can contact us here!

Explore our PANTHEON.tech GitHub.

Watch our YouTube Channel.

[lighty.io] Create & Use Containerized RNC App w/ Clustering

Posted in Blog by PANTHEON.tech

The lighty.io RESTCONF-NETCONF (RNC) application lets you easily initialize, start, and use the most commonly used OpenDaylight services, including clustering, and optionally add custom business logic. PANTHEON.tech provides a ready-made Helm chart inside the lighty.io RNC application, which can be easily used for Kubernetes deployment.

Clustering is a mechanism that enables multiple processes and programs to work together as one entity.

For example, when you search for something on google.com, it may seem like your search request is processed by only one web server. In reality, your search request is processed by many web servers connected in a cluster. Similarly, you can have multiple instances of OpenDaylight working together as one entity.

The advantages of clustering are:

- Scaling: If you have multiple instances of OpenDaylight running, you can potentially do more work and store more data than you could with only one instance. You can also break up your data into smaller chunks (shards) and either distribute that data across the cluster or perform certain operations on certain members of the cluster.

- High Availability: If you have multiple instances of OpenDaylight running and one of them crashes, you will still have the other instances working and available.

- Data Persistence: You will not lose any data stored in OpenDaylight after a manual restart or a crash.

This article demonstrates how to configure the lighty.io RNC application to use clustering, how to rescale the cluster, and how to connect a device to it.

Add the PANTHEON.tech Helm Repository to MicroK8s

To deploy the lighty.io RNC application, we will use the microk8s local Kubernetes engine and show how to install it. Feel free to use any other local Kubernetes engine you prefer and have installed.

1. Install microk8s with snap.

sudo snap install microk8s --classic
sudo usermod -a -G microk8s $USER
sudo chown -f -R $USER ~/.kube

Log out and back in (or run newgrp microk8s) so that the new group membership takes effect.

2. Enable the required add-ons.

microk8s enable dns helm3

3. Add the PANTHEON.tech Helm repository to microk8s.

microk8s.helm3 repo add pantheon-helm-repo https://pantheontech.github.io/helm-charts/

4. Update the repository.

microk8s.helm3 repo update

Configuring your lighty.io RNC Clustering app

Before we demonstrate how our cluster functions, we need to properly configure the lighty.io RNC app.

The lighty.io RNC application can be configured through a Helm values file. The default values.yaml file for the RNC app can be found in the lighty.io GitHub repository.

1. To enable clustering, set up the configuration using the --set flag with:

- lighty.replicaCount=3 // Configures the size of the cluster.

- lighty.akka.isSingleNode=false // If true, the Akka configuration is overwritten with the default configuration.

- nodePort.useNodePort=false // Uses a ClusterIP service rather than a NodePort service.

- lighty.moduleTimeOut=120 // The cluster takes some time to deploy, so set the timeout to a higher value.

Note: lighty.akka.isSingleNode must be set to false when using clustering.

microk8s.helm3 install lighty-rnc-app pantheon-helm-repo/lighty-rnc-app-helm --version 16.1.0 --set lighty.replicaCount=3,lighty.akka.isSingleNode=false,nodePort.useNodePort=false,lighty.moduleTimeOut=120

To modify the configuration after deploying the app, run the same command with “upgrade” instead of “install”.
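The introduction promises rescaling, and the upgrade route above is one way to do it. The following is a minimal sketch, assuming the release name, chart, and version from the install command; rescale_cluster and the HELM override variable are hypothetical helpers, while --reuse-values is a standard helm upgrade flag that keeps the values set at install time and only changes the cluster size.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rescale the lighty.io RNC cluster in place.
# HELM can be overridden (e.g. for a dry run); it defaults to the
# microk8s-bundled Helm 3 binary used throughout this article.
HELM="${HELM:-microk8s.helm3}"

rescale_cluster() {
  local replicas="$1"
  # Reject anything that is not a positive integer before touching the release.
  if ! [[ "$replicas" =~ ^[1-9][0-9]*$ ]]; then
    echo "usage: rescale_cluster <positive replica count>" >&2
    return 1
  fi
  # --reuse-values keeps the previous release's values (isSingleNode=false,
  # useNodePort=false, moduleTimeOut=120) and overrides only the cluster size.
  "$HELM" upgrade lighty-rnc-app pantheon-helm-repo/lighty-rnc-app-helm \
    --version 16.1.0 \
    --reuse-values \
    --set "lighty.replicaCount=${replicas}"
}

# Example: grow the cluster from 3 to 5 members.
# rescale_cluster 5
```

Afterwards, check the cluster membership as described in the clustering walkthrough further down this post.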

1.1 Verify that lighty-rnc-app is deployed.

microk8s.helm3 ls

Afterwards, you should see lighty-rnc-app listed with the status “deployed”.

2. Alternatively, set up the configuration using a customized values.yaml file.

2.1 Download the values.yaml file from lighty-core.

2.2 Update the image to your desired version, for example:

image:
  name: ghcr.io/pantheontech/lighty-rnc
  version: 16.1.0
  pullPolicy: IfNotPresent

2.3 Update the following values in the values.yaml file:

- lighty.replicaCount=3 // Configures the size of the cluster.

- lighty.akka.isSingleNode=false // If true, the Akka configuration is overwritten with the default configuration.

- nodePort.useNodePort=false // Uses a ClusterIP service rather than a NodePort service.

- lighty.moduleTimeOut=120 // The cluster takes some time to deploy, so set the timeout to a higher value.

Note: lighty.akka.isSingleNode must be set to false when using clustering.
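Rather than editing the whole downloaded file, you can also hand Helm a small override file, which it merges on top of the chart's defaults. A minimal sketch, assuming each dotted --set path maps to nested YAML keys in the chart's values.yaml (the usual Helm convention); the file name values-cluster.yaml is hypothetical, and the image block from step 2.2 is left out on the assumption that the chart's default image suits you.

```shell
#!/usr/bin/env bash
# Write a minimal Helm override file containing only the clustering settings.
# Each dotted --set path (e.g. lighty.replicaCount) becomes a nested YAML key.
# The file name is a hypothetical choice; any path works with --values.
VALUES_FILE="${VALUES_FILE:-values-cluster.yaml}"

cat > "$VALUES_FILE" <<'EOF'
lighty:
  replicaCount: 3          # size of the cluster
  moduleTimeOut: 120       # the cluster needs extra time to form
  akka:
    isSingleNode: false    # must be false when clustering
nodePort:
  useNodePort: false       # use a ClusterIP service instead of NodePort
EOF

echo "Wrote $VALUES_FILE"
# Deploy with:
#   microk8s.helm3 install lighty-rnc-app pantheon-helm-repo/lighty-rnc-app-helm \
#     --version 16.1.0 --values values-cluster.yaml
```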

2.4 Deploy the lighty.io RNC app with changed values.yaml file.

microk8s.helm3 install lighty-rnc-app pantheon-helm-repo/lighty-rnc-app-helm --version 16.1.0 --values [VALUES_YAML_FILE]

To modify the configuration after deploying the app, run the same command with “upgrade” instead of “install”.

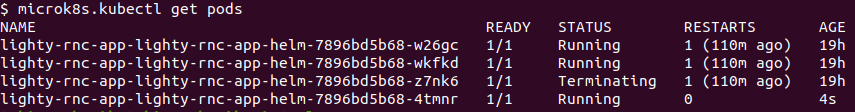

3. Verify that all pods have started. You should see as many pods as the value you set for replicaCount.
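Because the cluster takes a while to form, it can help to block until the pods are ready rather than re-running get pods. A sketch using the standard kubectl wait command; the label selector is the one this article uses to list pod IPs, and wait_for_rnc_pods plus the KUBECTL variable are hypothetical. Note that kubectl wait fails immediately if no matching pods exist yet, and "Ready" only means as much as the chart's readiness probe (an assumption worth checking in the chart).

```shell
#!/usr/bin/env bash
# Block until every lighty.io RNC pod reports the Ready condition.
# KUBECTL is a hypothetical override hook; it defaults to microk8s' kubectl.
KUBECTL="${KUBECTL:-microk8s.kubectl}"
RNC_SELECTOR="app.kubernetes.io/name=lighty-rnc-app-helm"

wait_for_rnc_pods() {
  local timeout="${1:-300s}"   # generous default, since the cluster forms slowly
  "$KUBECTL" wait --for=condition=Ready pod -l "$RNC_SELECTOR" --timeout="$timeout"
}

# Example:
# wait_for_rnc_pods 600s && "$KUBECTL" get pods -l "$RNC_SELECTOR"
```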

microk8s.kubectl get pods

Create a testing device w/ lighty.io NETCONF Simulator

For testing purposes, we need some devices, and the ideal tool for this is the lighty.io NETCONF Simulator. We will run the simulated device inside a Docker container, built from a Dockerfile available in the lighty.io repository.

1. Download the lighty.io NETCONF Simulator Dockerfile to a separate folder.

2. Build a Docker image from the Dockerfile.

sudo docker build -t lighty-netconf-simulator .

3. Start the Docker container with the testing device on port 17830. To use a different port, change the -p parameter.

sudo docker run -d --rm --name netconf-simulator -p17830:17830 lighty-netconf-simulator:latest

4. Check the IP address assigned to the Docker container. This value is used as the DEVICE_IP parameter in the requests below.

sudo docker inspect -f '{{range.NetworkSettings.Networks}}{{.IPAddress}}{{end}}' netconf-simulator

Clustering in the lighty.io RNC App

As mentioned at the beginning of this tutorial, the key to understanding clustering is to see many of the same instances as one functioning entity.

To demonstrate that our lighty.io RNC cluster is alive and well, we will show its “keep alive” behavior: when we manually remove the leader from the cluster, the system does not collapse, but elects a new leader. In practical terms, the cluster keeps functioning even when its leader is terminated or down, because it elects a new one on its own and thereby behaves as a single entity.

1. Show IP addresses of all pods.

microk8s.kubectl get pods -l app.kubernetes.io/name=lighty-rnc-app-helm -o custom-columns=":status.podIP"

2. Use one of the IP addresses from the previous step to view the members of the cluster.

curl --request GET 'http://[HOST_IP]:8558/cluster/members/'

Your response should look like this:

{
  "leader": "akka://opendaylight-cluster-data@10.1.101.177:2552",
  "members": [
    {
      "node": "akka://opendaylight-cluster-data@10.1.101.177:2552",
      "nodeUid": "4687308041747729846",
      "roles": [
        "member-10.1.101.177",
        "dc-default"
      ],
      "status": "Up"
    },
    {
      "node": "akka://opendaylight-cluster-data@10.1.101.178:2552",
      "nodeUid": "-29348997399314594",
      "roles": [
        "member-10.1.101.178",
        "dc-default"
      ],
      "status": "Up"
    }
  ],
  "oldest": "akka://opendaylight-cluster-data@10.1.101.177:2552",
  "oldestPerRole": {
    "member-10.1.101.177": "akka://opendaylight-cluster-data@10.1.101.177:2552",
    "dc-default": "akka://opendaylight-cluster-data@10.1.101.177:2552",
    "member-10.1.101.178": "akka://opendaylight-cluster-data@10.1.101.178:2552"
  },
  "selfNode": "akka://opendaylight-cluster-data@10.1.101.177:2552",
  "unreachable": []
}

In the response, you should see which member was elected as the leader, as well as all other members.

- Tip: Use Postman for better response readability
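To pick the leader's IP address out of this response without reading it by eye, a small text filter is enough. A sketch assuming IPv4 member addresses and the akka://system@ip:port format shown above; leader_ip is a hypothetical helper that uses sed so it does not depend on jq.

```shell
#!/usr/bin/env bash
# Extract the leader's IP address from the /cluster/members/ response on stdin.
# The leader is reported as akka://<system>@<ip>:<port>; only <ip> is printed.
# Works on both pretty-printed and single-line JSON (IPv4 addresses only).
leader_ip() {
  sed -n 's|.*"leader": *"akka://[^@]*@\([^:"]*\):[0-9]*".*|\1|p'
}

# Example (HOST_IP is any member's address):
# curl --silent --request GET "http://${HOST_IP}:8558/cluster/members/" | leader_ip
```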

3. Add the device to one of the members.

curl --request PUT 'http://[HOST_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node' \
--header 'Content-Type: application/json' \
--data-raw '{
  "netconf-topology:node": [
    {
      "node-id": "new-node",
      "host": "[DEVICE_IP]",
      "port": 17830,
      "username": "admin",
      "password": "admin",
      "tcp-only": false,
      "keepalive-delay": 0
    }
  ]
}'
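The request body above must carry the device IP as a JSON string. A sketch that builds the payload with the address quoted and sends it to the same RESTCONF URL; netconf_node_payload, mount_device, and the CURL override variable are hypothetical, and the credentials and port are copied from the request above.

```shell
#!/usr/bin/env bash
# Build the netconf-topology node payload (device IP quoted as a JSON string)
# and mount the device through RESTCONF. CURL is a hypothetical override hook.
CURL="${CURL:-curl}"

netconf_node_payload() {
  local node_id="$1" host="$2" port="${3:-17830}"
  printf '{"netconf-topology:node":[{"node-id":"%s","host":"%s","port":%d,"username":"admin","password":"admin","tcp-only":false,"keepalive-delay":0}]}\n' \
    "$node_id" "$host" "$port"
}

mount_device() {
  local member_ip="$1" node_id="$2" device_ip="$3"
  "$CURL" --request PUT \
    "http://${member_ip}:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=${node_id}" \
    --header 'Content-Type: application/json' \
    --data-raw "$(netconf_node_payload "$node_id" "$device_ip")"
}

# Example, taking the device IP straight from Docker:
# DEVICE_IP="$(sudo docker inspect -f '{{range.NetworkSettings.Networks}}{{.IPAddress}}{{end}}' netconf-simulator)"
# mount_device "$HOST_IP" new-node "$DEVICE_IP"
```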

4. Verify that the device was added to all members of the cluster.

curl --request GET 'http://[MEMBER_1_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node'
curl --request GET 'http://[MEMBER_2_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node'
...

Every member of the cluster should return the same device, with the same values:

{
  "network-topology:node": [
    {
      "node-id": "new-node",
      "netconf-node-topology:connection-status": "connected",
      "netconf-node-topology:username": "admin",
      "netconf-node-topology:password": "admin",
      "netconf-node-topology:available-capabilities": {
        "available-capability": [
          { "capability": "urn:ietf:params:netconf:base:1.1", "capability-origin": "device-advertised" },
          { "capability": "urn:ietf:params:netconf:capability:notification:1.0", "capability-origin": "device-advertised" },
          { "capability": "urn:ietf:params:netconf:capability:candidate:1.0", "capability-origin": "device-advertised" },
          { "capability": "urn:ietf:params:netconf:base:1.0", "capability-origin": "device-advertised" },
          { "capability": "(urn:ietf:params:xml:ns:yang:ietf-inet-types?revision=2013-07-15)ietf-inet-types", "capability-origin": "device-advertised" },
          { "capability": "(urn:opendaylight:yang:extension:yang-ext?revision=2013-07-09)yang-ext", "capability-origin": "device-advertised" },
          { "capability": "(urn:tech.pantheon.netconfdevice.network.topology.rpcs?revision=2018-03-20)network-topology-rpcs", "capability-origin": "device-advertised" },
          { "capability": "(urn:ietf:params:xml:ns:yang:ietf-yang-types?revision=2013-07-15)ietf-yang-types", "capability-origin": "device-advertised" },
          { "capability": "(urn:TBD:params:xml:ns:yang:network-topology?revision=2013-10-21)network-topology", "capability-origin": "device-advertised" },
          { "capability": "(urn:ietf:params:xml:ns:yang:ietf-netconf-monitoring?revision=2010-10-04)ietf-netconf-monitoring", "capability-origin": "device-advertised" },
          { "capability": "(urn:opendaylight:netconf-node-optional?revision=2019-06-14)netconf-node-optional", "capability-origin": "device-advertised" },
          { "capability": "(urn:ietf:params:xml:ns:netconf:notification:1.0?revision=2008-07-14)notifications", "capability-origin": "device-advertised" },
          { "capability": "(urn:opendaylight:netconf-node-topology?revision=2015-01-14)netconf-node-topology", "capability-origin": "device-advertised" }
        ]
      },
      "netconf-node-topology:host": "172.17.0.2",
      "netconf-node-topology:port": 17830,
      "netconf-node-topology:tcp-only": false,
      "netconf-node-topology:keepalive-delay": 0
    }
  ]
}
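Checking every member by hand gets tedious as the cluster grows. A sketch that fetches the node from each member and compares the raw responses; node_url, same_on_all_members, and CURL are hypothetical names, and the byte-for-byte comparison assumes members serialize the same data identically, which may not hold while a member is still syncing.

```shell
#!/usr/bin/env bash
# Verify that every cluster member returns the same document for a NETCONF node.
# CURL is a hypothetical override hook (defaults to the real curl).
CURL="${CURL:-curl}"

# Build the RESTCONF URL used in step 4 for a given member IP and node-id.
node_url() {
  printf 'http://%s:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=%s\n' "$1" "$2"
}

# Usage: same_on_all_members NODE_ID MEMBER_IP...
same_on_all_members() {
  local node_id="$1"
  shift
  if [ "$#" -eq 0 ]; then
    echo "no member IPs given" >&2
    return 1
  fi
  local reference="" body ip count=0
  for ip in "$@"; do
    # --fail turns HTTP errors (e.g. 404 for a missing node) into a non-zero exit.
    body="$("$CURL" --silent --fail --request GET "$(node_url "$ip" "$node_id")")" || return 1
    if [ "$count" -eq 0 ]; then
      reference="$body"          # the first member's answer is the reference
    elif [ "$body" != "$reference" ]; then
      echo "member $ip returned a different document" >&2
      return 1
    fi
    count=$((count + 1))
  done
  echo "all $count members returned the same document"
}

# Example, feeding in the pod IPs listed in step 1:
# same_on_all_members new-node $(microk8s.kubectl get pods -l app.kubernetes.io/name=lighty-rnc-app-helm -o custom-columns=":status.podIP")
```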

5. Find the leader and delete it

5.1 Show all pods. This returns the name of each pod.

microk8s.kubectl get pods

5.2 Find the leader by IP address

microk8s.kubectl get pod [NAME] -o custom-columns=":status.podIP" | xargs

In step 2, we found the IP address of the leader. Run the command above for each pod name from step 5.1; the pod whose IP address matches the leader's is the leader.
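The command in step 5.2 maps a name to an IP, so finding the leader means trying each pod in turn. A sketch of the reverse lookup in a single call, using kubectl's custom-columns and --no-headers output options; pod_name_for_ip and KUBECTL are hypothetical, and the label selector is the one from step 1 of this walkthrough.

```shell
#!/usr/bin/env bash
# Map a pod IP (e.g. the leader's IP from step 2) back to its pod name.
# KUBECTL is a hypothetical override hook; it defaults to microk8s' kubectl.
KUBECTL="${KUBECTL:-microk8s.kubectl}"

pod_name_for_ip() {
  local ip="$1"
  # Print NAME and IP columns without a header, then keep the exact IP match.
  "$KUBECTL" get pods -l app.kubernetes.io/name=lighty-rnc-app-helm \
    -o custom-columns=NAME:.metadata.name,IP:.status.podIP --no-headers |
    awk -v ip="$ip" '$2 == ip { print $1 }'
}

# Example: delete the current leader (step 5.3) once its IP is known.
# LEADER_NAME="$(pod_name_for_ip 10.1.101.177)"
# [ -n "$LEADER_NAME" ] && "$KUBECTL" delete -n default pod "$LEADER_NAME"
```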

5.3 Delete the pod.

microk8s.kubectl delete -n default pod [LEADER_NAME]

5.4 Verify that the pod is terminating

microk8s.kubectl get pods

You should see the old leader with status “Terminating” and a new pod running, which will replace it.

6. Verify that a new leader was elected.

curl --request GET 'http://[HOST_IP]:8558/cluster/members/'

{
  "leader": "akka://opendaylight-cluster-data@10.1.101.178:2552",
  "members": [
    {
      ...

As a result of deleting the pod, a new leader was elected from the remaining pods.
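Leader election takes a moment, so querying too early may still return the old leader or fail. A polling sketch, assuming the same members endpoint and response format as in step 2; wait_for_new_leader, its attempt and interval parameters, and CURL are hypothetical, and the host IP passed in must belong to a surviving member, not the deleted pod.

```shell
#!/usr/bin/env bash
# Poll /cluster/members/ on a surviving member until the reported leader
# differs from the deleted one, then print the new leader's IP.
# CURL is a hypothetical override hook (defaults to the real curl).
CURL="${CURL:-curl}"

# Usage: wait_for_new_leader HOST_IP OLD_LEADER_IP [ATTEMPTS] [INTERVAL_SECONDS]
wait_for_new_leader() {
  local host_ip="$1" old_leader_ip="$2"
  local attempts="${3:-30}" interval="${4:-5}" n leader
  for ((n = 1; n <= attempts; n++)); do
    # Extract <ip> from "leader": "akka://<system>@<ip>:<port>" (IPv4 only).
    leader="$("$CURL" --silent --fail "http://${host_ip}:8558/cluster/members/" |
      sed -n 's|.*"leader": *"akka://[^@]*@\([^:"]*\):[0-9]*".*|\1|p')"
    if [ -n "$leader" ] && [ "$leader" != "$old_leader_ip" ]; then
      echo "$leader"
      return 0
    fi
    sleep "$interval"
  done
  echo "no new leader after $attempts attempts" >&2
  return 1
}
```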

7. Verify that the new pod also contains the device. The response should be the same as in step 4.

curl --request GET 'http://[NEW_MEMBER_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node'

8. Delete the device

curl --request DELETE 'http://[HOST_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf/node=new-node'

9. Verify that the testing device was removed from all cluster members

curl --request GET 'http://[MEMBER_1_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf'
curl --request GET 'http://[MEMBER_2_IP]:8888/restconf/data/network-topology:network-topology/topology=topology-netconf'
...

Your response should look like this:

{
  "network-topology:topology": [
    {
      "topology-id": "topology-netconf"
    }
  ]
}

10. Logs from the device can be shown by executing this command:

sudo docker logs netconf-simulator

11. Logs from the lighty.io RNC app can be shown by executing the following command:

microk8s.kubectl logs [POD_NAME]
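When following a leader change, it is often useful to see the logs of all members at once. A sketch that loops over the RNC pods (kubectl get with -o name prints pod/NAME entries, which kubectl logs accepts) and prints the tail of each log; tail_rnc_logs and KUBECTL are hypothetical.

```shell
#!/usr/bin/env bash
# Print the last lines of every lighty.io RNC pod's log, headed by the pod name.
# KUBECTL is a hypothetical override hook; it defaults to microk8s' kubectl.
KUBECTL="${KUBECTL:-microk8s.kubectl}"

tail_rnc_logs() {
  local lines="${1:-50}" pod
  for pod in $("$KUBECTL" get pods -l app.kubernetes.io/name=lighty-rnc-app-helm -o name); do
    echo "==> ${pod} <=="
    "$KUBECTL" logs "$pod" --tail="$lines"
  done
}

# Example:
# tail_rnc_logs 100
```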

This post explained how to start a containerized lighty.io RNC application and configure it to use clustering. It also showed how to create your own cluster configuration, either from a values file or with the --set flag, to set the size of the cluster to your liking. Finally, it demonstrated rescaling the cluster and connecting a simulated device to it.

by Tobiáš Pobočík & Peter Šuňa

[What Is] Multus CNI

Posted in Blog, CDNF.io by PANTHEON.tech

Multus CNI (Container Network Interface) is a novel approach to managing multiple CNIs in your container network (Kubernetes). As its name (Latin for “multiple”) suggests, Multus is an open-source plugin that serves as an additional layer in a container network, enabling multi-interface support. This matters, for example, for Virtual Network Functions (VNFs), which often depend on connectivity towards multiple network interfaces.

The CNI project itself, backed by the Cloud Native Computing Foundation, defines a minimum specification on what a common interface should look like. The CNI project consists of three primary components:

- Specification: an API that lies between the network plugins and runtimes

- Plugins: depending on use-cases, they help set up the network

- Library: Go implementations of the CNI specification, which are used by runtimes

Each CNI plugin delivers different functionality, which makes Multus a valuable plugin for managing these functionalities and making them work together.

Multus acts as an intermediary between the container runtime and a selection of plugins, which are called upon to do the actual network configuration tasks.

Multus Characteristics

- Mediates between the container runtime and plugins

- Performs no network configuration by itself (relies on other plugins)

- Reuses Flannel's concept of delegates to group plugins

- Supports reference & 3rd party plugins

- Supports SR-IOV, DPDK, OVS-DPDK & VPP workloads with cloud-native & NFV based applications

Multus Plugin Support & Management

Currently, Multus supports all plugins maintained in the official CNI repository, as well as 3rd party plugins like Contiv, Cilium or Calico.

Plugins are managed as delegates (a concept Multus borrows from Flannel), which are invoked in a certain sequence according to the JSON scheme in the CNI configuration. Flannel is an overlay network for Kubernetes that configures a layer 3 network fabric and therefore satisfies Kubernetes' networking requirements; it is a common default choice for the primary network. Multus assigns the eth0 interface in the pod to the primary/master plugin, while the remaining plugins receive netX interfaces (net1, net2, etc.).
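To make the delegate model concrete, here is a minimal sketch of how an additional network is commonly requested through Multus: a NetworkAttachmentDefinition holds the CNI configuration of the extra delegate, and a pod annotation asks for it. It assumes Multus is already installed; the macvlan plugin, host interface eth1, subnet, image, and all resource names are hypothetical placeholders, and this is not the StoneWork demo configuration.

```shell
#!/usr/bin/env bash
# Write a manifest that gives a pod one extra interface through Multus.
# eth0 still comes from the primary CNI; the macvlan delegate defined in the
# NetworkAttachmentDefinition adds a second interface. eth1 (host NIC), the
# subnet, the image and all names are hypothetical placeholders.
MANIFEST="${MANIFEST:-multus-demo.yaml}"

cat > "$MANIFEST" <<'EOF'
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: macvlan-conf
spec:
  config: '{
      "cniVersion": "0.3.1",
      "type": "macvlan",
      "master": "eth1",
      "mode": "bridge",
      "ipam": { "type": "host-local", "subnet": "192.168.1.0/24" }
    }'
---
apiVersion: v1
kind: Pod
metadata:
  name: multus-demo
  annotations:
    k8s.v1.cni.cncf.io/networks: macvlan-conf
spec:
  containers:
  - name: demo
    image: alpine
    command: ["sleep", "3600"]
EOF

echo "Wrote $MANIFEST"
# Apply and inspect (requires Multus in the cluster):
#   microk8s.kubectl apply -f multus-demo.yaml
#   microk8s.kubectl exec multus-demo -- ip addr
```

After applying the manifest, ip addr inside the pod should list eth0 from the primary network plus one additional interface created by the macvlan delegate.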

StoneWork in K8s Pod with Multiple Interfaces

Our team created a demo on how to run StoneWork in a Microk8s pod with multiple interfaces attached via the Multus add-on.

This example attaches two existing host interfaces to the StoneWork container running in a MicroK8s pod. With this configuration, multiple DPDK interfaces, as well as multiple af_packet interfaces, can be added to StoneWork.

If you are interested in more details regarding this implementation, contact us for more information!

Utilizing Cloud-Native Network Functions

If you are interested in high-quality CNFs for your next or existing project, make sure to check out CDNF.io – a portfolio of cloud-native network functions, by PANTHEON.tech.

[OpenDaylight] Migrating Bierman RESTCONF to RFC 8040

Posted in Blog, OpenDaylight by PANTHEON.tech

The RESTCONF protocol draft draft-bierman-netconf-restconf-02, named after A. Bierman, is HTTP-based and enables the manipulation of YANG-defined data sets through a programmatic interface. It relies on the same datastore concepts as NETCONF, with modifications to enable HTTP-based CRUD operations.

Learn how to migrate from the legacy ‘draft-bierman-netconf-restconf-02‘ to RFC8040 in OpenDaylight.

NETCONF vs. RESTCONF

While NETCONF uses SSH for network device management, RESTCONF supports secure HTTP access (HTTPS). RESTCONF also allows for easy automation through a RESTful API, where the syntax of the datastore is defined in YANG.

YANG is a data modeling language used to model configuration data, state data, and administrative actions. PANTHEON.tech offers an open-source tool for verifying YANG data in OpenDaylight or lighty.io, as well as an IntelliJ plugin called YANGinator.

What is YANG?

The YANG data modeling language is widely viewed as an essential tool for modeling configuration and state data manipulated over NETCONF, RESTCONF, or gNMI.

RESTCONF/NETCONF Architecture

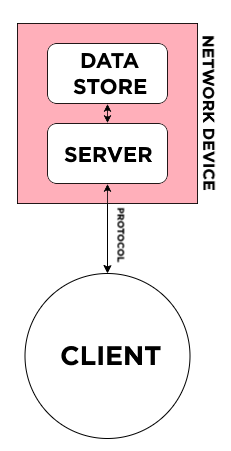

NETCONF defines configuration datastores and a set of CRUD operations (create, retrieve, update, delete). RESTCONF does the same but adheres to REST API & HTTPS compatibility.

The importance of RESTCONF therefore lies in its programmability and flexibility for network configuration automation use-cases.

By design, the architecture of this communication looks the same in both cases: a network device, composed of a datastore (modeled in YANG) and a server (RESTCONF or NETCONF), communicates with the target client through a protocol (RESTCONF or NETCONF):

RESTCONF/NETCONF client communication flow.

RESTful API

REST is a widely established set of rules for building stateless, reliable web APIs. RESTful is an informal term for a web API that follows the REST requirements.

RESTful APIs are primarily built on HTTP, accessing resources via URL-encoded parameters and transmitting data as JSON or XML.

OpenDaylight was one of the early adopters of the RESTCONF protocol. For increased compatibility, two RESTCONF implementations are supported today – the legacy draft-bierman-netconf-restconf-02 & RFC8040.

What’s New in RFC8040?

The biggest difference between the RFC8040 implementation of RESTCONF and the legacy Bierman implementation is the transition to YANG 1.1 support.

YANG 1.1 introduces a new type of RPC operation, called actions, which are attached to selected nodes in the data schema. RFC8040 also relies on the YANG Library, which describes the set of YANG modules, with their revisions, features, and deviations, that a server supports.

Other new features include new XPath functions, the option to define notifications tied to data nodes, and more. For more detailed insight, we recommend reading this comprehensive list of changes.

Migration from Legacy RESTCONF Implementation

Since the RFC8040 RESTCONF implementation is now in General Availability and ready to replace the legacy Bierman draft, PANTHEON.tech has decided to stop supporting the draft implementation and help customers with migration.
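The most visible change during migration is the URL layout. A sketch that prints the two forms of the same read request, assuming OpenDaylight defaults (port 8181, the legacy API under /restconf/config and /restconf/operational, RFC8040 under /rests); the ODL variable and both helper names are hypothetical. lighty.io RNC, covered earlier on this page, serves RFC8040 under /restconf on port 8888 instead.

```shell
#!/usr/bin/env bash
# Side-by-side URLs for reading the same NETCONF topology node through both
# RESTCONF flavours. ODL (host:port) is a hypothetical override variable.
ODL="${ODL:-localhost:8181}"

# Draft (Bierman-02): datastore chosen by a path segment, list keys as segments.
bierman_node_url() {
  local datastore="$1" node_id="$2"   # datastore: config | operational
  printf 'http://%s/restconf/%s/network-topology:network-topology/topology/topology-netconf/node/%s\n' \
    "$ODL" "$datastore" "$node_id"
}

# RFC8040: a single /data resource, list keys written as list=key, and the
# datastore view selected with the ?content= query parameter.
rfc8040_node_url() {
  local content="$1" node_id="$2"     # content: config | nonconfig | all
  printf 'http://%s/rests/data/network-topology:network-topology/topology=topology-netconf/node=%s?content=%s\n' \
    "$ODL" "$node_id" "$content"
}

# Example: the legacy config read and its RFC8040 counterpart.
# curl "$(bierman_node_url config new-node)"
# curl "$(rfc8040_node_url config new-node)"
```

The legacy operational view roughly corresponds to ?content=nonconfig, or to the default all when configuration data should be included as well.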

Contact PANTHEON.tech for support in migrating RESTCONF implementations from draft-bierman-netconf-restconf-02 to RFC8040.

[Opinion] Why Open-Source Matters

Posted in Blog by PANTHEON.tech

PANTHEON.tech is an avid open-source supporter. As the largest contributor to the OpenDaylight source code, active in many more open-source projects and creator of our own, we believe that open software enriches collaboration and makes for better products overall.

The practical consequences of software being open are far-reaching, and much more significant than an uninvolved observer would guess at first glance. To see them, you need to look at a big enough project, or better yet, at an entire infrastructure consisting of open-source projects.

Collaboration in Open-Source

A big advantage open-source brings is collaboration. This might sound obvious, but it has several forms:

- Collaboration between companies, even competitors, each contributing their own piece to the common open code, results in far better products than standalone work.

- Collaboration with academics. The best results have always been achieved with academic precision.

Research & Academics in Open-Source

Researchers prefer to spend their valuable time working on open code and open research. Some run state-of-the-art static or formal analysis tools on open-source projects as part of their research and then send patches.

Some design well-defined APIs, such as POSIX for UNIX. Others solve known problems using open-source platforms as a base, for example NixOS, which solves many packaging problems.

But why do academics prefer open code? Not only because it is more accessible. Some prefer it from a philosophical point of view: since code is similar to a mathematical equation or other scientific findings, it should be published publicly.

Code should be perfect, consistent, and bug-free. That’s why collaboration with people who have this mindset is invaluable.

Community & Collaboration

Community collaboration. A great many excellent engineers contribute to open source in their free time, whether for fun, for their own needs, out of belief in the open-source philosophy, to help other people, or to find meaning in their work.

Informal collaboration: mailing lists, IRC channels, Stack Overflow, blogs, and forums. Anyone who has developed a closed-source project knows what I am talking about: it is much easier to find information about an open-source project, whether it is a guide, documentation, an explanation of an error message, or a bug.

This makes work on open-source projects much more effective and simple.

Verification in Open-Source

The verification side of collaboration is crucial. The number of eyeballs reviewing your code, again and again, is a huge advantage compared to the closed-source practice of a one-time review by one or two people. For the same reason, Linus Torvalds said:

I made it publicly available but I had no intention to use the open-source methodology, I just wanted to have comments on the work.

Different people have different ideas and points of view. The involvement of lots of computer science experts makes the results far more promising and objective.

The overall time diverse people spend working on open-source is much bigger than the costs any corporation would be willing to pay for their closed source equivalent.

But there is much more about open source than just better collaboration. Open-source is also a philosophy of freedom, meaningfulness, and the idea that wisdom should be shared.

As social beings, people would rather create something that makes sense and might help others, and use things without being bound in any way.

Imagine a Closed World

Now, just for contrast, let’s imagine everything was closed, even standards and protocols, all in one big monopoly.

You would be forced to use only their technology and to buy services or updates just to keep working as usual. And what’s worse? What if the company stops delivering or supporting something you really need, for example, the formatting of your important documents?

Kind of scary. Let’s instead imagine an open world, where absolutely everything is done in an open-source manner: cars, electrical appliances, everything. I believe you now see the importance of the open-source philosophy as well.

Open source is the future. It’s modern, and it’s the right way to go from many points of view. Even big players who initially didn’t like the idea are now involved, at least partially.

But how can we adapt to these changes? How is it possible to run a business with open source?

Stay tuned for Part 2, where we might touch on this topic.

by Július Milan

[StoneWork] IS-IS Feature

Posted in Blog by PANTHEON.tech

PANTHEON.tech continues to develop its Cloud-Native Network Functions portfolio with the recent inclusion of Intermediate System-to-Intermediate System (IS-IS) routing support, based on FRRouting. This complements and augments our current StoneWork Enterprise routing offerings, giving customers an alternative to the usual networking vendors’ solutions.

Leveraging FRRouting, a Linux Foundation, open-source, industry-leading project, PANTHEON.tech provides a comprehensive suite of routing options. These include OSPF, BGP, and now IS-IS, which can fully integrate and interoperate with existing, or new, networking requirements.

As a cloud-native network function, our solution is designed to maximize container-based technologies and micro-services architecture.

We provide the IS-IS feature to our customers with the following options:

- IS-IS CNF – Standalone CNF appliance with IS-IS support

- StoneWork Enterprise – Security, switching, and routing features, now with IS-IS support

StoneWork Enterprise & IS-IS Integration

The control plane is based on a Ligato agent, which configures every aspect of FRR. The two protocols, OSPF & IS-IS, run in separate daemons. Routes are passed to Zebra (the FRR IP routing manager), which installs them into the Linux kernel's default routing table.

The data plane forwards this information via a TAP tunnel towards a VPP instance (supporting IS-IS & OSPF), which, together with another Ligato agent in the StoneWork container, delivers OSPF & IS-IS functionality in an FRRouting-based, cloud-native network function.

With the power of containerization and enterprise-grade routing protocols, StoneWork Enterprise enables network service providers to easily get on board with cloud-native acceleration and enjoy all of its benefits.

What is FRRouting?

FRRouting (FRR) is a fully open-source internet routing protocol suite, with support for BGP, OSPF, OpenFabric, and more. FRR provides IP routing services, routing & policy decisions, and general exchange of routing information with other routers. It achieves its speed by installing routing decisions directly into the OS kernel. FRR supports a wide range of L3 configurations and dynamic routing protocols, making it a flexible and lightweight choice for a variety of deployments.

FRR's strength lies in its native integration with the Linux/Unix IP networking stack. This enables a wide range of networking use-cases, be it LAN switching & routing, internet access routers or peering, or even connecting hosts/VMs/containers to a network. It is hosted as a Linux Foundation collaborative project.

What is the IS-IS protocol?

The Intermediate System to Intermediate System (IS-IS) protocol is one of the most commonly deployed routing protocols across large network service providers and enterprises.

Specifically, it is an interior gateway protocol (IGP), used for exchanging routing information within an autonomous system. IS-IS runs directly over the data link layer (L2) and does not require IP connectivity between routers, which also makes it harder to attack with IP-based traffic. It is often considered more flexible and scalable than OSPF.
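For readers new to IS-IS, a minimal plain-FRR configuration shows the moving parts: a routing process identified by an area tag, a Network Entity Title (NET) that encodes the area and system ID, and per-interface enablement. This is a sketch only; StoneWork configures FRR through its Ligato agent, and the tag CORE, the NET value, and interface eth1 are hypothetical. On a stock FRR install, isisd must also be enabled with isisd=yes in /etc/frr/daemons.

```shell
#!/usr/bin/env bash
# Write a minimal plain-FRR IS-IS configuration snippet (not StoneWork's
# Ligato-driven setup). Tag, NET and interface name are hypothetical.
CONF="${CONF:-isisd-sketch.conf}"

cat > "$CONF" <<'EOF'
interface eth1
 ip router isis CORE
 isis network point-to-point
!
router isis CORE
 net 49.0001.1921.6800.1001.00
 is-type level-2-only
!
EOF

echo "Wrote $CONF"
# On a host running FRR, the same lines can be entered in vtysh's
# "configure terminal" mode and checked with: vtysh -c 'show isis neighbor'
```

In the NET 49.0001.1921.6800.1001.00, 49 marks a private address space, 0001 is the area, 1921.6800.1001 is the system ID (here derived from 192.168.1.1), and the final 00 is the selector.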

While some use-cases might call for a different routing protocol, our StoneWork Enterprise solution now integrates IS-IS as part of FRRouting, and IS-IS is also available as a standalone CNF (Cloud Native Network Function) appliance.

Buy StoneWork Enterprise today!

If you are interested in StoneWork Enterprise, make sure to contact us today for a free, introductory consultation!

StoneWork is a high-performance, all-(CNFs)-in-one network solution.

Thanks to its modular architecture, StoneWork dynamically integrates all CNFs from our portfolio. A configuration-dependent startup of modules provides feature-rich control plane capabilities while preserving a single, high-performance data plane.

This way, StoneWork achieves the best possible resource utilization, unified declarative configuration, and re-use of data paths for packet punting between cloud-native network functions. By using the FD.io VPP data plane and container orchestration, StoneWork delivers excellent performance in both cloud and bare-metal deployments.

by PANTHEON.tech | Leave us your feedback on this post!

You can contact us here!

Explore our PANTHEON.tech GitHub.

Watch our YouTube Channel.

[lighty.io RNC] Create & Use Containerized RESTCONF-NETCONF App

by PANTHEON.tech

The lighty.io RNC (RESTCONF-NETCONF) application makes it easy to initialize, start, and use the most commonly used OpenDaylight services, and optionally add custom business logic.

lighty.io RNC has recently been used in the first-ever production deployment of ONAP by Orange.

This pre-packaged container image served as a RESTCONF-NETCONF bridge for communication between the ONAP component CDS and Cisco® NSO.

The app ships with a ready-made Helm chart for Kubernetes deployment. This tutorial walks through deploying the lighty.io RNC application step by step with Helm 2 or Helm 3 and a custom, local Kubernetes engine.

Read the complete tutorial after subscribing:

You will learn how to deploy the lighty.io RNC app via Helm 2 or Helm 3. Since some developers may still prefer Helm 2, we have prepared deployment scenarios for both versions of this Kubernetes package manager.

You are free to pick any Kubernetes engine for this deployment. We will use, and walk through the installation of, the MicroK8s local Kubernetes engine.

- Helm 2: requires Kubernetes 1.21 or lower

- Helm 3: requires Kubernetes 1.22 or higher
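The Helm/Kubernetes pairing above is a simple version rule. As a minimal sketch (the function name `helm_major_for` is hypothetical, and it encodes only the condition stated in this tutorial), it could be checked before choosing a deployment scenario:

```python
def helm_major_for(k8s_version: str) -> int:
    """Pick the Helm major version for a Kubernetes version, per this tutorial.

    Encodes only the tutorial's stated rule: Helm 2 for Kubernetes 1.21 or
    lower, Helm 3 for Kubernetes 1.22 and higher.
    """
    # Accept forms such as "1.21", "v1.22.4" or "1.20.15".
    major, minor = (int(part) for part in k8s_version.lstrip("v").split(".")[:2])
    return 2 if (major, minor) <= (1, 21) else 3


if __name__ == "__main__":
    for version in ("1.20.15", "1.21", "v1.22.4"):
        print(version, "-> Helm", helm_major_for(version))
```

Comparing `(major, minor)` tuples keeps the check correct across minor versions with different digit counts, which a plain string comparison would not.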

We will also show you how to simulate NETCONF devices with another lighty.io tool. The tutorial concludes with simple CRUD operations for testing the lighty.io RNC app.
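To give an idea of what such CRUD tests involve, here is a minimal sketch that builds, without sending, RFC 8040 RESTCONF requests using only the Python standard library. The host, port (`localhost:8888`) and `/restconf` root are assumptions, not values taken from the tutorial; the `topology-netconf` path and `yang-ext:mount` segment follow the usual OpenDaylight convention for NETCONF-mounted devices, and the helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# Assumed endpoint: RNC app on localhost:8888 serving RESTCONF under /restconf.
BASE_URL = "http://localhost:8888/restconf/data"
# OpenDaylight-style topology that holds NETCONF device mounts.
NETCONF_TOPOLOGY = "network-topology:network-topology/topology=topology-netconf"
# RESTCONF media type defined by RFC 8040.
YANG_JSON = "application/yang-data+json"

# CRUD operation -> HTTP method (RFC 8040: PUT creates or replaces a
# resource, GET reads it, PATCH merges changes into it, DELETE removes it).
CRUD_METHODS = {"create": "PUT", "read": "GET", "update": "PATCH", "delete": "DELETE"}


def node_url(node_id: str, mounted_path: str = "") -> str:
    """URL of a NETCONF node entry, or of data on the mounted device."""
    url = f"{BASE_URL}/{NETCONF_TOPOLOGY}/node={urllib.parse.quote(node_id, safe='')}"
    if mounted_path:
        url += "/yang-ext:mount/" + mounted_path
    return url


def restconf_request(operation: str, url: str, body: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a RESTCONF request for one CRUD operation."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    req = urllib.request.Request(url, data=data, method=CRUD_METHODS[operation])
    req.add_header("Accept", YANG_JSON)
    if data is not None:
        req.add_header("Content-Type", YANG_JSON)
    return req


if __name__ == "__main__":
    req = restconf_request("read", node_url("device1"))
    print(req.get_method(), req.full_url)
```

Once the RNC app is running, a request built this way could be sent with `urllib.request.urlopen(req)`. The body for mounting a new device depends on the `netconf-node-topology` model version in use, so it is deliberately left out here.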

Why lighty.io?

PANTHEON.tech’s enhanced OpenDaylight-based software offers significant benefits in performance, agility, and availability.

As the ongoing lead contributor to the Linux Foundation’s OpenDaylight, PANTHEON.tech develops lighty.io, with the ultimate goal of enhancing the network controller experience for a variety of use-cases.

lighty.io offers a modular and scalable architecture, giving DevOps teams greater flexibility to deploy only the modules and libraries each use-case requires. This makes lighty.io not only more lightweight at runtime, but also more agile during development and deployment.

Because only the minimum required set of components and libraries is present at runtime, stability improves as well.

Thanks to its modularity, lighty.io provides a stable and scalable network controller, well suited to production deployments and the growing number of 5G/Edge use cases.

Learn more by visiting the official homepage or contacting us!

by Peter Šuňa

2021 | A Look Back

by PANTHEON.tech

Join us in looking back at what PANTHEON.tech created and took part in during 2021.

Most significantly, PANTHEON.tech celebrated its 20th anniversary.

We have participated in two conferences this year, validated our position within the OpenDaylight community, and expanded our product portfolio!

lighty.io: Releases & Tools

The lighty.io team at PANTHEON.tech shipped several releases in 2021, along with a number of accompanying tools!

lighty.io RESTCONF-NETCONF Controller

- Out-of-the-box

- Pre-packaged

- Microservice-ready application

Use it to easily manage network elements in your SDN use case.

You can read more about the project in its dedicated GitHub README, or in the in-depth article below:

lighty.io YANG Validator

Lets developers create, validate, and visualize the YANG data models of their application using only the lighty.io framework, without calling any external tools.

YANGinator | Validate YANG files in IntelliJ

Built for IntelliJ, one of the most popular IDEs, YANGinator is a plugin for validating YANG files without leaving your IDE window!

Visit the project on GitHub, or on the official marketplace.

Conferences & Events

PANTHEON.tech sponsored and attended two conferences this year: Broadband World Forum in Amsterdam, and Open Networking & Edge Summit + Kubernetes on Edge Day, which was ultimately held virtually.

Our network controller SDK, lighty.io, was mentioned during the OCP Global Summit, as part of a SONiC Automation use-case.

lighty.io was also part of the first-ever production deployment of ONAP by Orange.

News, Blogs & Use-Cases

We encourage you to read through more than 28 original posts on our website. Topics range from explanations of recent network trends to interesting use-cases for enterprises.

Lanner Inc. partnered with PANTHEON.tech and successfully validated StoneWork’s all-in-one Cloud-Native Functions solution on Lanner’s NCA-1515 uCPE platform.

PANTHEON.tech has been recognized as a member of the Intel Network Builders program Winners Circle.

Pantheon Fellow and leading OpenDaylight expert Robert Varga was invited to a podcast episode of the Broadband Bunch!

CDNF.io | A cloud-native portfolio

Our cloud-native network functions portfolio, CDNF.io, includes StoneWork, a combined data and control plane, and 18 CNFs.

Learn more about this expanding project by visiting its homepage, CDNF.io, or contact us directly to explore your options.

SandWork | Automate & Orchestrate Your Network

SandWork is the ultimate orchestration tool for your enterprise network. This Smart Automated Net/Ops & Dev/Ops platform orchestrates your networks through a user-friendly UI. Manage anywhere from hundreds to thousands of individual nodes with open-source technology, backed by enterprise support and integration.

Contact us to learn more about SandWork!

Newsletter

We wish our existing and future partners wonderful holidays and a great start to 2022! Stay up-to-date with us and subscribe to our newsletter, so you don’t miss any news!

[lighty.io] Orange Deploys ONAP in Production

by PANTHEON.tech

On November 11, Orange hosted a webinar with the Linux Foundation, showcasing its production deployment of ONAP to automate its IP/MPLS transport network infrastructure.

Learn how to containerize and deploy your own lighty.io RNC instance in this tutorial!

PANTHEON.tech is proud to see its OpenDaylight-based lighty.io project included in Orange’s first successful production ONAP deployment. lighty.io is an open-source project developed and supported by PANTHEON.tech.

Open-Source Matters

Orange’s vision and goal is to move from a closed IT architecture to an open platform architecture based on the Open Digital Framework (ODF), a blueprint produced by members of TM Forum. ONAP provides ODF-compliant functional blocks covering the delivery of many use-cases, such as service provisioning for 5G transport, IP, fixed access, microwave, and optical network services, as well as CPE management, OS upgrades, and many others.

Why lighty.io?

SDN-C is ONAP’s network controller component, responsible for managing, assigning, and provisioning network resources. It is based on OpenDaylight, with additional Directed Graph Execution capabilities. lighty.io, PANTHEON.tech’s enhanced OpenDaylight-based software, offers significant benefits in performance, agility, and availability, taking SDN-C to another level.

OpenDaylight vs. lighty.io

With easier dependency upgrades and pre-packaged, use-case-based applications, lighty.io brings Software-Defined Networking to cloud-native environments.

A reduced codebase footprint and packaging make lighty.io more:

- Lightweight

- Agile

- Stable

- Secure

- Scalable

PANTHEON.tech also provides other complementary tools, plugins, and additional features, like:

- Java Protocol libraries

- Network Simulators

- YANG Validators

- Pre-packaged, use-case-driven container applications with Helm charts

Orange Egypt: ONAP Production Deployment

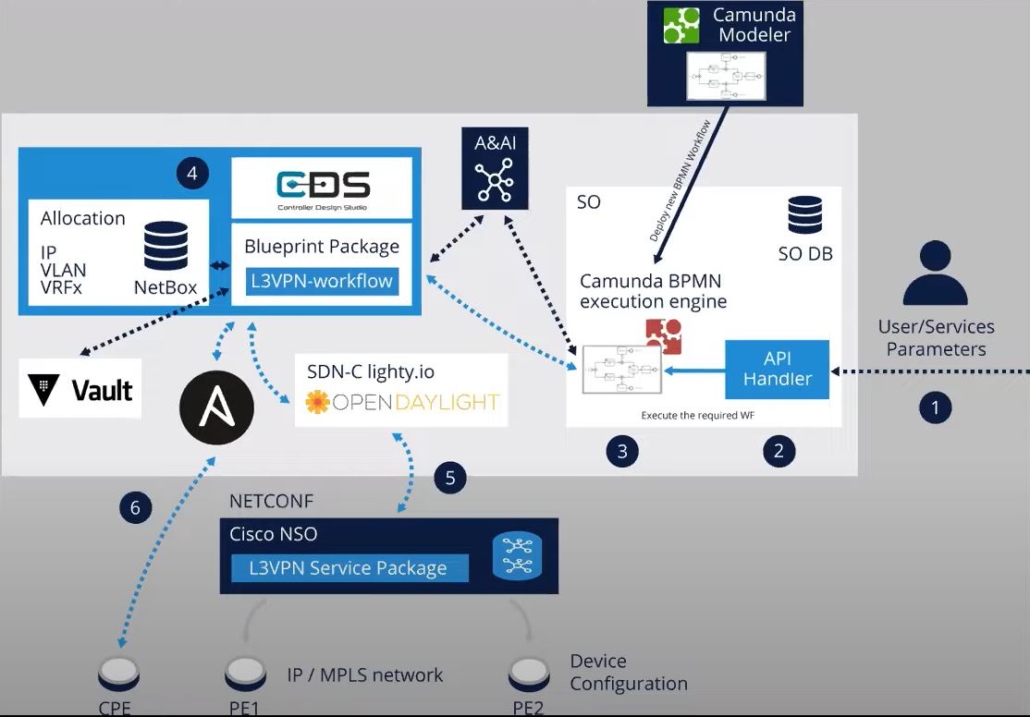

Orange Egypt uses ONAP as a northbound, higher-level orchestrator for L3VPN service provisioning.

A user enters the network service parameters in a GUI. The request is then submitted through a REST API to the ONAP Service Orchestrator (SO), powered by Camunda’s BPMN workflow engine, which executes a custom-designed workflow.

The instantiated SO workflow then triggers the ONAP Controller Design Studio (CDS) through a REST API, and CDS runs custom blueprint packages to start provisioning the L3VPN network services.

In this production deployment, lighty.io is used for communication between CDS and Cisco NSO. The application used is lighty-RNC, a pre-packaged container image that provides a RESTCONF-NETCONF bridge.

CDS allocates the required resources (IP addresses and VLANs) from NetBox and retrieves the lighty.io secret from Vault. Once CDS has allocated the needed resources, lighty.io sends the information to Cisco NSO over a NETCONF session, and NSO configures the devices.

Source: YouTube, LFN Webinar: Orange Deploys ONAP In Production

Future of ONAP

Orange demonstrated ONAP in an IP/MPLS backbone automation use-case, but that is just the beginning. ONAP is already starting to look into automation and SDN control of microwave and optical networks, paving the way for other operators to deploy ONAP in their use cases.

You can watch the entire webinar here:

by PANTHEON.tech

We Are 20 Years Old

by PANTHEON.tech

In 2001, a friend asked me if I would like to start a company with him. Word got out, so in the end there were four of us: a bunch of acquaintances who didn’t really know what they were doing. Between our other commitments, we did not have time for the company, and this was fully reflected in the first financial statements.

In 2004, after graduating from university, I turned my attention back to the company. I had the ambition to run it, and over time we agreed to transfer all shares to my sister and me. It was then that Pantheon became a FAMILY COMPANY.

Alongside routine system integration work, we started out selling used hardware, aiming at retail. At that time, however, prices and margins began to fall sharply, so we switched to wholesale. We exported to the Middle East, Asia, and Africa. Over time, we added ATMs, which we shipped from Slovakia to Hong Kong and Turkey.

I enjoyed this job. Pantheon had about 5 employees at the time. However, margins continued to decline, and an opportunity arose to focus on software development. I did not turn the offer down: there was significantly more money in this area. At the time, I didn’t even think about whether I was going to “build a big business” or where I wanted to be in 20 years. It is very fashionable to talk that way today, but if I claimed otherwise, I would be lying.

In 2009, we signed our first contract in Silicon Valley. We were a good team who enjoyed our work. In 2011, we opened our first office in the US, and in 2013, we started expanding to Banská Bystrica and Žilina.

Our growth let us gain new experience and turn it into our own portfolio of networking products, such as lighty.io, CDNF.io, SandWork, and EntGuard. We also built Chronos, a comprehensive HR system that handles all personnel processes, including employee records and attendance management.

At our peak, we had more people than we could manage. It was time to cut back a little and make quality our top priority. Even today, we keep working on communicating clearly to employees what kind of work environment we support, through both our expectations and the benefits we offer them.

This year, we celebrate 20 years since our founding. During that time, we repaid our debts and took the opportunity to grow. We learned that growth is not directly proportional to quality, and we prefer to stay smaller but more valuable to ourselves and our clients. We have also found that we will always look for, and prefer, people who are not afraid of challenges and self-reflection.

I am proud of our journey so far and look forward to everything that lies ahead, especially on the product side.

Tomáš Jančo, CEO