OpenDaylight Project Celebrates 10 Years (of Active Development)

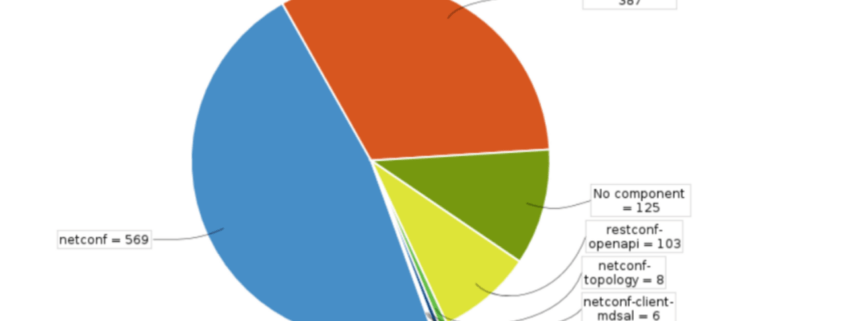

/in News, OpenDaylight /by Pantheon.tech

The OpenDaylight Project is an open-source software-defined networking (SDN) controller platform that has been in active development for 10 years. Since its launch in 2013, the project has grown and evolved significantly, and it has become one of the most popular SDN solutions used by companies worldwide.

Over the years, the OpenDaylight Project has seen several major changes and developments. In the early days, the project focused on building a flexible and customizable platform for SDN controllers. The platform was designed to be vendor-neutral, so it could support a variety of hardware and software solutions, including switches, routers, and firewalls.

As the project grew in popularity, it attracted a vibrant and active community of developers, users, and contributors. The community has been instrumental in shaping the direction of the project, and it has contributed to a variety of new features and functionality.

One of the major developments in recent years has been the adoption of a more modular architecture. The platform is now broken down into several different components, each of which can be customized and extended to meet the needs of different users and applications. This modular architecture has made the OpenDaylight Project even more flexible and adaptable, and it has helped to cement its position as a leading SDN controller platform.

Another important development has been the focus on performance and scalability. The OpenDaylight Project has been optimized to handle large-scale networks, and it can easily scale to support hundreds of thousands of network elements. This has made it an attractive solution for large enterprises and service providers that need to manage complex networks.

In addition to these technical developments, the OpenDaylight Project has also seen significant growth in its ecosystem. Over its lifetime, companies such as Cisco, Huawei, and Red Hat have contributed to the project the most. Over the past few years, however, the balance has shifted, and we are proud to share that PANTHEON.tech has become one of the largest contributors to the OpenDaylight project, followed by companies such as Verizon, Orange, and Rakuten.

This diverse ecosystem has helped to ensure that the project remains vendor-neutral and that it can support a wide range of hardware and software solutions.

OpenDaylight has proven over time to be one of the most production-ready and feature-rich controller platforms, used by many companies and communities such as ONAP, PANTHEON.tech, Fujitsu, Infinera, and Elisa Polestar. At PANTHEON.tech, we bring our long-term OpenDaylight expertise to our valued customers, and we deliver all the resulting feedback and code back to the OpenDaylight community.

Looking to the future, the OpenDaylight Project shows no signs of slowing down. The community continues to develop new features and functionalities, and it remains one of the most active and vibrant communities in the open-source networking world. With its modular architecture, performance, and scalability, the OpenDaylight Project is well-positioned to continue to be a leading SDN controller platform for years to come.

by the PANTHEON.tech Team | Leave us your feedback on this post!

You can contact us here!

Explore our PANTHEON.tech GitHub.

Watch our YouTube Channel.

PANTHEON.tech at the OCP Regional Summit Prague 2023

/in News /by Pantheon.tech

We are proud to announce that our Chief Product Officer, Miroslav Mikluš, will be speaking at the upcoming OCP Regional Summit 2023 in Prague this May!

Don’t miss out: come see him speak on SONiC Enterprise Features, where he’ll share his expertise and insights and engage in discussions with fellow industry leaders on the latest trends and innovations.

by the PANTHEON.tech Team | Leave us your feedback on this post!

SONiC Enterprise Data Center Orchestration

/in Blog, News /by Pantheon.tech

SandWork is now Generally Available

SONiC (Software for Open Networking in the Cloud) is an open-source network operating system that has become increasingly popular in recent years. This powerful and flexible platform is used by hyperscalers, large enterprises and cloud providers to build their data center networks.

SONiC was originally developed by Microsoft as a network operating system for large-scale data center environments. The open-source project has gained popularity in recent years thanks to its flexibility, scalability, and extensibility, with many contributions from large organizations and individual contributors.

Another key benefit of SONiC is its support for multi-vendor environments. Unlike many proprietary network operating systems, SONiC is vendor-agnostic and can be used with a wide range of network hardware from different manufacturers. This enables enterprises to choose the best network hardware for their needs, without being tied to a single vendor’s solutions.

In addition, SONiC is highly secure, with built-in support for secure communication, role-based access control, and other security features. This makes it an ideal platform for enterprises that require robust security measures to protect their data center infrastructure.

To maximize and build upon the benefits of SONiC in the data center, PANTHEON.tech has leveraged its open source heritage and experience in network automation and software-defined networking to develop SandWork, which is now generally available.

One of the key benefits of SandWork is its ability to orchestrate network infrastructure in the enterprise data center. SandWork provides a programmable framework and intuitive user interface that enables network administrators to automate the configuration and management of data center network devices. This significantly reduces the time and effort required to manage the data center network fabric, while also increasing efficiency and scalability.

SandWork uses a modular design that enables administrators to customize the platform to meet their specific needs. It provides a rich set of APIs and tools that enable developers to build their own applications and scripts to automate network management tasks. This approach makes SandWork highly extensible and adaptable, making it an ideal solution for complex and dynamic data center environments.

SandWork is also highly scalable, supporting large-scale data center environments with thousands of network devices. It provides advanced features for managing network infrastructure. This makes it easier for network administrators to identify and troubleshoot issues in the data center, ensuring maximum uptime and availability.

Overall, SandWork is a powerful and flexible platform for managing and orchestrating enterprise data centers. With its advanced features for network management and security, SandWork is a valuable tool for any organization looking to optimize their data center operations.

by the PANTHEON.tech Team | Leave us your feedback on this post!

[Video] Open source software in the world of networking

/in News /by Pantheon.tech

We are proud that our PANTHEON.tech fellow, Robert Varga, has been a major code contributor to the OpenDaylight Project for several years now. He took part in building the architecture of these open source projects from the very beginning, and he shares some of his insights and expertise in an interview that took place at the Developer and Tester Forum organized by the Linux Foundation Networking.

Find the video below and let us know what you think!

[Video] The Future of OpenDaylight scalability

/in News, OpenDaylight /by Pantheon.tech

November 16, 2022, Seattle, Washington, USA. PANTHEON.tech took part in ONE SUMMIT 2022, an event hosted by the Linux Foundation.

PANTHEON Fellow, Robert Varga, introduced the audience to The Future of OpenDaylight Scalability.

For those who missed it and could not attend the conference, we bring you great news – you can now watch the video and find out what evolutionary challenges the future brings for the OpenDaylight community!

Find the video below.

by the PANTHEON.tech Team | Leave us your feedback on this post!

[Video] SONiC & OpenDaylight integration

/in News /by Pantheon.tech

November 15, 2022, Seattle, Washington, USA. The PANTHEON.tech team participated in ONE SUMMIT 2022, hosted by the Linux Foundation.

Our speaker, Miroslav Mikluš, Chief Product Officer, gave a talk on the integration of SONiC and OpenDaylight.

For those who could not attend the conference, we bring you great news – you can now watch the video to learn more about how this integration works and to gain a deeper insight into how we build our own platform on these principles!

Find the video below.

by the PANTHEON.tech Team | Leave us your feedback on this post!

[Release] lighty.io December releases are on fire!

/in News, OpenDaylight /by Pantheon.tech

Have you wondered whether anything has been happening behind the lighty.io curtain recently? Of course it has!

Our team has been working diligently on improving our portfolio, and we have released several new versions of lighty.io, our open-source variant of the well-known OpenDaylight project.

PANTHEON.tech is one of the largest contributors to OpenDaylight, and we are therefore proud to be able to implement the newest improvements as soon as possible.

Continue reading to find out what’s new on the block.

1. lighty.io YANG Validator 17.0.1

This release was created for a customer who ran into some bugs and made us aware of them. Our experts fixed the bugs by updating to yangtools version 9.0.5 and reworking how the tree is traversed.

The release also includes cleanups, preparations for the next development phases, and other changes – you can read all about them in the changelog!

2. lighty.io 16.3.0

In order to remain compatible with the OpenDaylight Sulfur SR3 release, we had to come up with a new release of lighty.io 16.3.0. Interested in what has changed? Find out here!

3. lighty.io NETCONF Simulator 16.3.0

As they say, all good things come in threes: lighty.io NETCONF Simulator has also been updated to 16.3.0, as noted in the repository.

4. lighty.io YANG Validator 16.3.0

And a fourth one is a small miracle: our experts also released a new version of lighty.io YANG Validator, 16.3.0, which keeps it compatible with OpenDaylight Sulfur SR3.

You can read about what’s new on our GitHub page by following the red button below.

More articles you might be interested in? Let’s see…

lighty.io YANG Validator

Create, validate and visualize the YANG data model of your application, without the need to call any other external tool – just by using the lighty.io framework.

If you need to validate YANG files within JetBrains, we have got you covered as well. Check out YANGINATOR by PANTHEON.tech!

lighty.io NETCONF Simulator

Need to simulate hundreds of NETCONF devices for development purposes? Look no further! With lighty.io NETCONF Simulator based on lighty.io 17 core, you are able to simulate any NETCONF device and test its compatibility with your use-case.

by the PANTHEON.tech Team | Leave us your feedback on this post!

The Future of OpenDaylight Scalability

/in News, OpenDaylight /by Pantheon.tech

The OpenDaylight community naturally needs to think about the evolution of OpenDaylight (ODL), which is necessary to keep up with new use cases whose demands exceed what ODL currently offers. As one of the largest contributors to the ODL source code, we at PANTHEON.tech realize how important it is to keep pace with our clients’ demands, so that they can use ODL effectively in dynamic environments.

PANTHEON.tech has identified several areas that need to evolve: architecture, YANG space management, the request router, and deployment. We see these as waiting at our doorstep to be incorporated into practical solutions and use cases, which can then address upcoming trends such as IoT, service orchestration, edge computing, Transport PCE / network optimization, RAN & 5G, hierarchical controllers, and many others.

Got you interested? Our Pantheon Fellow, Robert Varga, will tell you much more about this topic at the upcoming ONE SUMMIT 2022, an event hosted by the Linux Foundation taking place in Seattle, Washington, next week. His presentation, The Future of OpenDaylight Scalability, is scheduled for Wednesday, November 16th at 3:40 pm (PST) at the Sheraton Grand Seattle hotel.

So make sure you don’t miss out – click here for registration.

by the PANTHEON.tech Team | Leave us your feedback on this post!

SONiC Data Center automation w/ ODL integration

/in News, OpenDaylight /by Pantheon.tech

Software for Open Networking in the Cloud, also known as SONiC, is an open source network operating system that was recently handed over to the Linux Foundation. This contribution will accelerate the adoption of white-box devices based on open networking.

Our team at PANTHEON.tech has been focusing on increasing SONiC’s usability while also contributing to other Linux Foundation projects, such as OpenDaylight. Through these activities and contributions, we aim to combine these two powerful projects and to enable the orchestration of SONiC-based network environments. As PANTHEON.tech has been building its own orchestration platform based on these principles, we consider the model-driven integration between SONiC and OpenDaylight crucial to our growing portfolio.

Miroslav Mikluš, the Chief Product Officer at PANTHEON.tech, will give a brief overview of this integration at the upcoming ONE SUMMIT 2022, hosted by the Linux Foundation in Seattle, Washington. You can meet him and attend his presentation, SONiC Data Center Automation W/ OpenDaylight, next week on Tuesday, November 15th, 2022, at 4 pm (PST) at the Sheraton Grand Seattle hotel.

So make sure you don’t miss out – click here for registration.

by the PANTHEON.tech Team | Leave us your feedback on this post!

PANTHEON.tech becomes a Cloud-Native Computing Foundation (CNCF) Member

/in News /by Pantheon.tech

To support and enhance our open source Cloud Native Function development and goals, PANTHEON.tech is extremely pleased to announce our membership in the Cloud-Native Computing Foundation (CNCF).

“PANTHEON.tech is delighted to be part of CNCF’s charter to ‘make cloud native computing ubiquitous’, showing how large and small organizations can benefit.” – Miroslav Mikluš, CPO of PANTHEON.tech

In addition to our membership, PANTHEON.tech is also delighted to highlight the first two of our applications to have earned CNF Certified status: two apps from our open source network automation platform, lighty.io – RNC & RCgNMI.

As one of the leading contributors to Linux Foundation Networking projects, we developed lighty.io as PANTHEON.tech’s enhanced OpenDaylight SDN software platform, which offers significant benefits in performance, agility and availability. For simpler deployment, we provide a portfolio of lighty.io integrated apps:

- The lighty.io RESTCONF-NETCONF (RNC) application allows simpler initialization and utilization of the most used OpenDaylight services and optional add-on custom business logic.

- The lighty.io RESTCONF-gNMI (RCgNMI) application allows for intuitive configuration of gNMI devices. The app primarily increases the capabilities of lighty.io for different implementations and solutions, which include gNMI device configuration.

CNCF is an open-source, vendor-neutral hub for cloud-native computing, bringing together organizations such as MSPs/CSPs and enterprises with experienced, expert CNF providers. Our membership therefore underlines PANTHEON.tech’s goal of gaining recognition for open source software performance, availability, and scale through certification.

PANTHEON.tech is among the first adopters of the CNF Certification program, run by the Cloud-Native Computing Foundation. Other early adopters include F5, Juniper Networks, and MATRIXX Software.

“I am thrilled to announce that lighty.io, a lightweight OpenDaylight distribution, has passed the CNF Certification program, and we can declare OpenDaylight as cloud native ready” said Miroslav Mikluš, CPO of PANTHEON.tech.

If you would like to hear more on our Cloud Native development work, services and products, please don’t hesitate to contact us.

[Release] lighty.io 17

/in News, SDN /by Pantheon.techReady for an upgrade?

Even though nights are getting longer, our experts ain’t getting any sleepier – let us introduce you to the newest release of lighty.io – 17!

PANTHEON.tech has been one of the largest contributors to the OpenDaylight code for several years – hence we were eager to implement the latest changes from the Chlorine SR2 release.

Come have a look at what we have achieved and how we have been doing since the launch of the previous version.

Certified CNF

Starting with the latest and greatest news: two of our lighty.io apps, lighty.io RESTCONF-NETCONF (RNC) and lighty.io RESTCONF-gNMI (RCgNMI), have been officially certified as Certified CNFs by the Cloud-Native Computing Foundation.

New Features

Every major release deserves some new features to go along with it. lighty.io 17 is not far behind and introduces a couple of them:

- Keeping up with the OpenDaylight Chlorine SR2 release

- Open-sourced the lighty-guice-app example

  - Our team has open-sourced a previously unavailable example, which allows lighty.io to work with Google Guice, the Java-based dependency injection framework

- New GitHub workflow test for lighty.io RNC app clustering

  - Read more about clustering in the lighty.io RNC app here

- Added configuration for basic authorization to AAA

  - Our Authentication, Authorization & Accounting module has a new configuration for basic authorization

- Reusable workflow for lighty.io app test jobs

- Replaced reload4j with log4j2

Improvements & Security Fixes

Alongside the new features come improvements and security fixes, which our team worked on to make the lighty.io experience better, faster and safer for everyone:

- Replaced javax with jakarta

- Changed the ODL compatibility release in the documentation

- Fixed the reported CVE-2020-36518 & CVE-2022-2048 issues

- Fixed the vulnerabilities reported in the previous version

Learn about other lighty.io tools below

We have already mentioned two of our apps above (lighty.io RESTCONF-NETCONF App & lighty.io gNMI App). But that’s not all! Let us introduce you to some more apps:

lighty.io YANG Validator

By using the lighty.io framework, our customers are able to create, validate and visualize the YANG data models of their application.

lighty.io NETCONF Simulator

Need to simulate hundreds of NETCONF devices for development purposes? Look no further! With lighty.io NETCONF Simulator based on lighty.io 17 core, you are able to simulate any NETCONF device and test its compatibility with your use-case.

[Release] And speaking of releases, a new version of lighty.io NETCONF Simulator, 17, has been released too! Click here for all the delivered changes.

by lighty.io Team | Leave us your feedback on this post!

[Release] lighty.io 15.1 – All Hands on Kubernetes!

/in News /by PANTHEON.tech

The PANTHEON.tech team working on lighty.io has been busy with the newest release, corresponding to OpenDaylight Phosphorus SR1.

As the largest contributor to the OpenDaylight Phosphorus release, PANTHEON.tech was eager to implement the improvements of OpenDaylight’s first service release as soon as possible!

Let’s have a look at what else is new in lighty.io 15.1!

[Improvements] Updates & Additions

Several improvements were made to the lighty.io 15.1 release. A Maven profile was added to collect & list licenses for dependencies used in lighty.io – this makes it easier to correctly address licenses for the variety of dependencies in lighty.io.

Speaking of dependencies – upstream dependencies were updated to correspond with the OpenDaylight Phosphorus SR1 release versions:

- odlparent 9.0.8

- aaa-artifacts 0.14.7

- controller-artifacts 4.0.7

- bundle-parent 4.0.7

- infrautils-artifacts 2.0.8

- mdsal-artifacts 8.0.7

- netconf-artifacts 2.0.9

- yangtools-artifacts 7.0.9

- yang-maven-plugin 7.0.9

- mdsal-binding-java-api-generator 8.0.7

- checkstyle 9.0.8

[lighty.io Apps] Available in Kubernetes!

Most of our standalone apps are available for K8s deployment, including Helm charts!

lighty.io RESTCONF-NETCONF Application

An out-of-the-box pre-packaged microservice-ready application you can easily use for managing network elements in your SDN use case. Learn more here:

lighty.io gNMI RESTCONF Application

An easily deployable tool for manipulating gNMI devices – and it’s open-source! Imagine CRUD operations on multiple gNMI devices, managed by one application. Learn more here:

[Reminder] Other wonderful lighty.io apps!

lighty.io NETCONF Simulator

Simulate hundreds to thousands of NETCONF devices within your development or CI/CD – for free and of course, open-source. Learn more here:

lighty.io YANG Validator

Create, validate and visualize the YANG data model of your application, without the need to call any other external tool – just by using the lighty.io framework.

If you need to validate YANG files within JetBrains, we have got you covered as well. Check out YANGINATOR by PANTHEON.tech!

Give lighty.io 15.1 a try and let us know what you think!

by the lighty.io Team | Leave us your feedback on this post!

[Release] lighty.io 14

/in News, OpenDaylight, SDN /by PANTHEON.tech

Building an SDN controller is easy with lighty.io!

Learn why enterprises rely on lighty.io for their network solutions.

What changed in lighty.io 14?

lighty.io 14 maintains compatibility with the OpenDaylight Silicon release.

lighty.io RNC

The RESTCONF-NETCONF Controller Application (lighty.io RNC) is part of this release; go check out the in-depth post on this wonderful application!

We added a Helm chart to lighty.io RNC, for an easily configurable Kubernetes deployment.

Features & Improvements

Corrected usage of the open-source JDK 11 in three examples:

- LGTM Build

- lighty.io OpenFlow w/ RESTCONF (Docker file)

- lighty.io w/ SpringBoot Integration

We have migrated from testing & deploying using Travis CI to GitHub Actions! This switch makes for easier integration of forked repositories with GitHub Actions & SonarCloud, while uploading & storing build artifacts in GitHub was also made possible – yay!

As for GitHub workflows, we added Helm & Docker publishing, as well as the ability to specify checkout references in the workflow itself.

lighty.io Examples received smoke tests (also called confidence or sanity testing), which will point out severe and simple failures. JSON sample configurations also received updates.

We realized that the time-tracking logs were hard to read, so we made them more readable!

We moved all the necessary functionality from the lighty-codecs artifact into the newly introduced lighty-codecs-util, which replaces it.

The Controller received an updated README file, as well as initial configuration data functionality.

Updates to Upstream Dependencies

Updated upstream dependencies to the latest OpenDaylight Silicon versions:

- odlparent 8.1.1

- aaa-artifacts 0.13.2

- controller-artifacts 3.0.7

- infrautils-artifacts 1.9.6

- mdsal-artifacts 7.0.6

- mdsal-model-artifacts 0.13.3

- netconf-artifacts 1.13.1

- yangtools-artifacts 6.0.5

- openflowplugin-artifacts 0.12.0

- serviceutils-artifacts 0.7.0

lighty.io 13.3

For compatibility with OpenDaylight Aluminium, we have also released the 13.3 version of lighty.io!

The release received a Docker Publish workflow, the ability to specify checkout references in a workflow, and Helm publishing workflow as well.

You can find all changes in the lighty.io 13.3 release in the changelog, here.

by Michal Baník & Samuel Kontriš | Subscribe to our newsletter!

[OpenDaylight] Migrating to AKKA 2.6.X

/in News, OpenDaylight /by PANTHEON.tech

PANTHEON.tech has enabled OpenDaylight to migrate to the current version of AKKA, 2.6.x. Today, we will review recent changes to AKKA, which is the heart of OpenDaylight’s Clustering functionality.

As the largest committer to the OpenDaylight source code, PANTHEON.tech will keep you regularly updated about our efforts in projects surrounding OpenDaylight.

AKKA 2.6, released in November 2019, added many improvements, including better documentation, and introduced a lot of new features. In case you are new to AKKA, here is a description from the official website:

[It is] a set of open-source libraries for designing scalable, resilient systems that span processor cores and networks. [AKKA] allows you to focus on meeting business needs instead of writing low-level code to provide reliable behavior, fault tolerance, and high performance.

OpenDaylight Migrates to AKKA 2.6

The most important features of AKKA 2.6 are briefly described below; for the full list, please see the release notes.

Make sure to check out the AKKA Migration Guide 2.5.x to 2.6.x, and the PANTHEON.tech OpenDaylight page!

AKKA Typed

AKKA Typed is the new typed Actor API. It has been declared ready for production use since AKKA 2.5.22, and since 2.6.0 it has been the officially recommended Actor API for new projects. The documentation was also significantly improved.

In a typed API, each actor declares an acceptable message type, and only messages of this type can be sent to the actor. This is enforced by the system.
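To make the idea concrete, here is a minimal, single-threaded Java sketch of a typed mailbox. It is not the AKKA Typed API: `TypedActor`, `CounterCommand`, `Increment`, `Reset` and `Counter` are hypothetical names used only for illustration. The actor's message type is a type parameter, so the Java compiler, rather than a runtime check, rejects messages of the wrong type.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.function.Consumer;

// Hypothetical single-threaded sketch of the typed-actor idea; this is NOT the AKKA Typed API.
// The type parameter M is the only message type this actor accepts.
final class TypedActor<M> {
    private final Queue<M> mailbox = new ArrayDeque<>();
    private final Consumer<M> behavior;

    TypedActor(Consumer<M> behavior) {
        this.behavior = behavior;
    }

    // Enqueue a message; passing anything that is not an M is a compile-time error.
    void tell(M message) {
        mailbox.add(message);
    }

    // Hand queued messages to the behavior in arrival order; returns how many were handled.
    int processAll() {
        int handled = 0;
        M next;
        while ((next = mailbox.poll()) != null) {
            behavior.accept(next);
            handled++;
        }
        return handled;
    }
}

// Hypothetical message protocol for a counter actor.
interface CounterCommand {}

final class Increment implements CounterCommand {
    final int by;

    Increment(int by) {
        this.by = by;
    }
}

final class Reset implements CounterCommand {}

// Hypothetical actor state plus the behavior that reacts to each CounterCommand.
final class Counter {
    private int value;

    void onMessage(CounterCommand command) {
        if (command instanceof Increment) {
            value += ((Increment) command).by;
        } else if (command instanceof Reset) {
            value = 0;
        }
    }

    int value() {
        return value;
    }
}
```

With `TypedActor<CounterCommand> actor = new TypedActor<>(counter::onMessage)`, a call such as `actor.tell("hello")` does not compile. AKKA delivers messages asynchronously; the sketch processes them synchronously to keep the focus on typing.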

Classic (untyped) extensions can be accessed seamlessly as well.

The classic APIs for modules, such as Persistence, Cluster Sharding and Distributed Data, are still fully supported, so the existing applications can continue to use those.

Artery

Artery is a reimplementation of the old remoting module, aimed at improving performance and stability, based on Aeron (UDP) and AKKA Streams TCP/TLS, instead of Netty TCP.

Artery provides more stability and better performance for systems using AKKA Cluster. Classic remoting is deprecated but still can be used. More information is provided in the migration guide.

Jackson-Based Serialization

AKKA 2.6 includes a new Jackson-based serializer, supporting both JSON and CBOR formats. This is the recommended serializer for applications using AKKA 2.6. Java serialization is disabled by default.

Distributed Publish-Subscribe

With AKKA Typed, actors on any node in the cluster can subscribe to specific topics. The message published to one of these topics will be delivered to all subscribed actors. This feature also works in a non-cluster setting.
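The delivery semantics described above can be sketched in plain Java. `TopicRegistry` is a hypothetical, in-process class, not AKKA's distributed `Topic` API, and it ignores the cluster transport entirely; it only shows that every subscriber of a topic receives each message published to that topic.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical in-process sketch of topic-based publish-subscribe; NOT AKKA's Topic API.
// There is no cluster transport here: all subscribers live in the same JVM.
final class TopicRegistry<T> {
    private final Map<String, List<Consumer<T>>> subscribers = new HashMap<>();

    // Register a subscriber for one topic; a topic may have any number of subscribers.
    void subscribe(String topic, Consumer<T> subscriber) {
        subscribers.computeIfAbsent(topic, t -> new ArrayList<>()).add(subscriber);
    }

    // Deliver the message to every current subscriber of the topic; returns the fan-out count.
    int publish(String topic, T message) {
        List<Consumer<T>> targets = subscribers.getOrDefault(topic, Collections.emptyList());
        for (Consumer<T> target : targets) {
            target.accept(message);
        }
        return targets.size();
    }
}
```

Publishing to a topic with no subscribers simply delivers nothing, which mirrors the fire-and-forget nature of publish-subscribe.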

Passivation in Cluster

With the Cluster passivation feature, you can stop persistent entities that are not in use, reducing memory consumption, by defining a timeout for receiving messages. The passivation timeout can be pre-configured in the cluster settings or set explicitly for an entity.
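Timeout-based passivation boils down to tracking when each entity last received a message. The following Java sketch is hypothetical (`PassivatingRegion`, `deliver` and `passivateIdle` are not AKKA classes or methods) and takes explicit `Instant` timestamps instead of running real timers, so its behavior is deterministic.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;

// Hypothetical sketch of idle-timeout passivation; NOT AKKA Cluster Sharding's API.
// Entities that have not received a message within the timeout are stopped to free memory.
final class PassivatingRegion {
    private final Duration idleTimeout;
    private final Map<String, Instant> lastMessageAt = new HashMap<>();

    PassivatingRegion(Duration idleTimeout) {
        this.idleTimeout = idleTimeout;
    }

    // Delivering a message (re)activates the entity and refreshes its last-activity time.
    void deliver(String entityId, Instant now) {
        lastMessageAt.put(entityId, now);
    }

    // Stop every entity idle for longer than the timeout; returns how many were passivated.
    int passivateIdle(Instant now) {
        int stopped = 0;
        Iterator<Map.Entry<String, Instant>> it = lastMessageAt.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Instant> entry = it.next();
            if (Duration.between(entry.getValue(), now).compareTo(idleTimeout) > 0) {
                it.remove();
                stopped++;
            }
        }
        return stopped;
    }

    boolean isActive(String entityId) {
        return lastMessageAt.containsKey(entityId);
    }
}
```

A passivated entity is simply started again when its next message arrives, which is why `deliver` both creates and refreshes entries.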

Cluster: External shard allocation

A new, alternative external shard allocation strategy is available, which allows explicit control over the allocation of shards. This covers use cases such as matching Kafka partition consumption with shard locations to avoid network hops.
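A minimal sketch of what "explicit control" means: the shard id mirrors a Kafka partition id, and whoever consumes that partition records its node as the shard's location. `ExternalShardAllocation`, `updateLocation` and `locationOf` are hypothetical names chosen for illustration, not AKKA's external allocation API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical sketch of external (explicit) shard allocation; NOT AKKA's external allocation API.
// Something outside the sharding layer, e.g. a Kafka consumer, decides where each shard lives.
final class ExternalShardAllocation {
    private final Map<String, String> shardToNode = new HashMap<>();

    // Called by the external decision maker, e.g. when Kafka reassigns a partition to a node.
    void updateLocation(String shardId, String node) {
        shardToNode.put(shardId, node);
    }

    // The sharding layer only looks the location up; it never chooses a node on its own.
    Optional<String> locationOf(String shardId) {
        return Optional.ofNullable(shardToNode.get(shardId));
    }
}
```

Because the shard lives on the same node that consumes the matching partition, messages for that shard avoid an extra network hop.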

Sharded Daemon Process

Sharded Daemon Process runs a set number of specific actors in a cluster and keeps them alive and balanced across the nodes. For rebalancing, an actor can be stopped and then started on a new node. This feature can be useful when a data processing workload needs to be split across a set number of workers.
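The placement and rebalancing idea can be sketched as follows. `DaemonPlacement` is a hypothetical helper, not AKKA's `ShardedDaemonProcess` API: it spreads workers with ids `0..workerCount-1` round-robin over the current nodes and, after a topology change, lists the workers that would have to be stopped and started on a new node.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of the Sharded Daemon Process idea; NOT AKKA's ShardedDaemonProcess API.
// A fixed number of workers (ids 0..workerCount-1) is spread round-robin over the cluster nodes.
final class DaemonPlacement {
    static Map<String, List<Integer>> place(int workerCount, List<String> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("at least one node is required");
        }
        Map<String, List<Integer>> placement = new TreeMap<>();
        for (String node : nodes) {
            placement.put(node, new ArrayList<>());
        }
        for (int id = 0; id < workerCount; id++) {
            placement.get(nodes.get(id % nodes.size())).add(id);
        }
        return placement;
    }

    // Workers whose node differs between two placements must be stopped and started on the new node.
    static List<Integer> toRestart(Map<String, List<Integer>> before, Map<String, List<Integer>> after) {
        Map<Integer, String> previousNode = new TreeMap<>();
        before.forEach((node, ids) -> ids.forEach(id -> previousNode.put(id, node)));
        List<Integer> moved = new ArrayList<>();
        after.forEach((node, ids) -> ids.forEach(id -> {
            if (!node.equals(previousNode.get(id))) {
                moved.add(id);
            }
        }));
        Collections.sort(moved);
        return moved;
    }
}
```

Naive round-robin keeps the worker count fixed and balanced, but it moves more workers than strictly necessary when a node leaves; a production strategy would try to minimize those restarts.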

by Konstantin Blagov | Leave us your feedback on this post!

You can contact us at https://pantheon.tech/

[Thoughts] On Karaf & Its Future

/in Blog, OpenDaylight /by PANTHEON.tech

These thoughts were originally sent to the public karaf-dev mailing list, where Robert Varga wrote a compelling opinion on what the future holds for Karaf and where it is currently headed. The text below was slightly edited from the original.

With my various OpenDaylight hats on, let me summarize our project-wide view, with a history going back to when the project was officially announced (early 2013).

From the get-go, our architectural requirement for OpenDaylight was OSGi compatibility. This means every single production (not maven-plugin obviously) artifact has to be a proper bundle.

This highly-technical and implementation-specific requirement was set down because of two things:

- What OSGi brings to MANIFEST.MF in terms of headers and intended wiring, incl. Private-Package

- Typical OSGi implementation (we inherited Equinox and are still using it) uses multiple class loaders and utterly breaks on split packages

This serves as an architectural requirement that translates to an unbreakable design requirement of how the code must be structured.

We started out with a home-brew OSGi container and quickly replaced it with Karaf 3.0.x (6?), massively enjoying it being properly integrated, with shell, management, and all that. Also, feature:install.

At the end of the day, though, OpenDaylight is a toolkit of a bunch of components that you throw together and they work.

Our initial thinking was far removed from the current world of containers, as far as operations go. The deployment was envisioned more like an NMS with a dedicated admin team (to paint a picture), providing a flexible platform.

The world has changed a lot, and the focus nowadays is on containers providing a single, hard-wired use-case.

We now provide out-of-the-box use-case wiring, using both dynamic Karaf and Guice (at least for one use case). We have an external project which shows the same can be done with pure Java, Spring Boot, and Quarkus.

We now also require Java 11, hence we have JPMS – and it can fulfill our architectural requirement just as well as OSGi. Thanks to OSGi, we have zero split packages.
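The split-package rule can be checked mechanically. The sketch below is a hypothetical helper (`SplitPackageCheck` is not an OpenDaylight tool) that, given the packages contained in each artifact, reports every package that appears in more than one artifact. Such packages break under OSGi's per-bundle class loaders, and JPMS likewise rejects a package that is split across modules in the same layer.

```java
import java.util.Map;
import java.util.Set;
import java.util.SortedMap;
import java.util.SortedSet;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical helper: reports "split packages", i.e. Java packages that appear in more than
// one artifact. Input maps an artifact name to the set of packages it contains.
final class SplitPackageCheck {
    static SortedMap<String, SortedSet<String>> findSplitPackages(Map<String, Set<String>> packagesByArtifact) {
        // Invert the input: package name -> artifacts that contain it.
        SortedMap<String, SortedSet<String>> owners = new TreeMap<>();
        packagesByArtifact.forEach((artifact, packages) -> {
            for (String pkg : packages) {
                owners.computeIfAbsent(pkg, p -> new TreeSet<>()).add(artifact);
            }
        });
        // Keep only packages owned by two or more artifacts.
        owners.values().removeIf(artifacts -> artifacts.size() < 2);
        return owners;
    }
}
```

An empty result is the "zero split packages" state described above; in a real build, the package sets would come from scanning each JAR's class entries.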

We do not expect to ditch Karaf anytime soon, but rather leverage static-framework for a light-weight OSGi environment, as that is clearly the best option for us short-to-medium term, and definitely something we will continue supporting for the foreseeable future.

The shift to nimble single-purpose wirings is not going away and hence we will be expanding there anyway.

To achieve that, we will not be looking for a framework-of-frameworks, we will do that through native integration ourselves.

If Karaf can do the same, i.e. have its general-purpose pieces available as components, easily thrown together with @Singletons or @Components, with multiple frameworks, as well as nicely jlinkable – now that would be something.

[Release] lighty.io 13

/in News /by PANTHEON.tech

With enterprises already deploying lighty.io in their networks, what are you waiting for? Check out the official lighty.io website, as well as references.

13 is an unlucky number in some cultures – but not in the case of the 13th release of lighty.io!

What’s new in lighty.io 13?

PANTHEON.tech has released lighty.io 13, which keeps up to date with OpenDaylight’s Aluminium release. A lot of major changes happened to lighty.io itself, which we break down for you here:

- ODL Parent 7.0.5

- MD-SAL 6.0.4

- YANG Tools 5.0.5

- AAA 0.12.0

- NETCONF 1.9.0

- OpenFlow 0.11.0

- ServiceUtils 0.6.0

- Maven SAL API Gen Plugin 6.0.4

Our team fixed the start scripts for the examples in the repository and bumped the Maven Compiler Plugin and Maven JAR Plugin (used for compiling and for building JARs, respectively). Fixes also include Coverity issues and code refactoring to comply with the source-quality profile, which was also enabled in this release. Furthermore, we have fixed the NETCONF delete-config preconditions, so they work as specified in RFC 6241.

As for improvements, we have reworked disabled tests and improved the AAA (Authentication, Authorization, Accounting) tests. Checkstyle was updated to version 8.34.

Since we maintain compatibility with OpenDaylight Aluminium, it is worth noting several accomplishments of OpenDaylight’s 13th release as well.

The largest new features are support for incremental data recovery and support for LZ4 compression. LZ4 offers lossless compression at speeds above 500 MB/s per core, which is quite impressive, and it can be utilized within OpenDaylight as well!

Incremental data recovery allows for datastore journal recovery and increased compression of datastore snapshots, which is where LZ4 support comes to the rescue!

Another major feature, if you remember, is the PANTHEON.tech initiative towards OpenAPI 3.0 support in OpenDaylight. Formerly known as Swagger, OpenAPI helps visualize API resources while giving the user the possibility to interact with them.

What is lighty.io?

Remember, lighty.io enables us to run OpenDaylight’s core features without Karaf, on any available Java platform. Contact us today for a demo or custom integration!

[What Is] XDP/AF_XDP and its potential

/in News /by PANTHEON.tech

What is XDP?

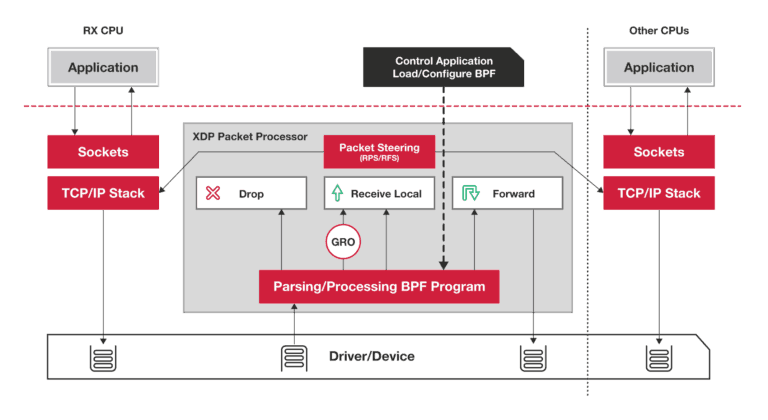

XDP (eXpress Data Path) is an eBPF (extended Berkeley Packet Filter) implementation for early packet interception. Received packets are not sent to the kernel IP stack directly, but can be sent to userspace for processing. Users may decide what to do with each packet (drop it, send it back, modify it, or pass it to the kernel). A detailed description can be found here.
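In a real XDP program (restricted C loaded into the kernel), "what to do with the packet" is expressed by returning a verdict. The Python model below only mimics that decision in userspace: the numeric values mirror `enum xdp_action` from the kernel's `linux/bpf.h`, while the policy itself (pass IPv4 and ARP, drop truncated frames and everything else) and the function name are made-up examples.

```python
from enum import IntEnum


class XdpAction(IntEnum):
    """Verdicts an XDP program can return (values mirror enum xdp_action)."""
    ABORTED = 0
    DROP = 1
    PASS = 2
    TX = 3
    REDIRECT = 4


ETH_HLEN = 14          # destination MAC (6) + source MAC (6) + EtherType (2)
ETH_P_IP = 0x0800      # IPv4
ETH_P_ARP = 0x0806     # ARP


def toy_policy(frame: bytes) -> XdpAction:
    """Hypothetical policy: hand IPv4 and ARP to the kernel, drop everything else."""
    if len(frame) < ETH_HLEN:
        return XdpAction.DROP                  # truncated frame
    ethertype = int.from_bytes(frame[12:14], "big")
    if ethertype in (ETH_P_IP, ETH_P_ARP):
        return XdpAction.PASS                  # continue into the kernel stack
    return XdpAction.DROP


ipv6_frame = bytes(12) + b"\x86\xdd"
print(toy_policy(ipv6_frame).name)  # DROP
```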

XDP is designed as an alternative to DPDK. It is slower and, at the moment, less mature than DPDK. However, it offers features and mechanisms already implemented in the kernel, whereas DPDK users have to implement everything in userspace.

At the moment, XDP is under heavy development and features may change with each kernel version. This leads to the first requirement: run the latest kernel version. Changes between kernel versions may not be compatible.

IO Visor description of XDP packet processing

XDP Attachment

An XDP program is attached to an interface and processes the RX queue of that interface (incoming packets). It is not possible to intercept the TX queue (outgoing packets), but kernel developers are continuously extending the XDP feature set, and TX support is one of the improvements attracting high interest from the community.

An XDP program can be loaded in several modes:

- Generic – the driver doesn’t support XDP, but the kernel fakes it. The XDP program works, but there’s no real performance benefit, because packets are handed to the kernel stack anyway, which then emulates XDP. This is usually what you get with the generic network drivers used in home computers, laptops, and virtualized HW.

- Native – the driver has XDP support and can hand packets to XDP without kernel stack interaction. Few drivers support it, and those are usually for enterprise HW.

- Offloaded – the XDP program is loaded and executed directly on the NIC. Just a handful of NICs can do that.

XDP programs run in a sandboxed environment (the in-kernel eBPF virtual machine). Multiple constraints are imposed, which should protect the kernel from errors in the XDP code. For example, there is a limit on how many instructions a single XDP program may contain. However, there is a workaround: a call table (a BPF program array) referencing various XDP programs that can call each other.
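The call-table workaround relies on tail calls: in the kernel, a `BPF_MAP_TYPE_PROG_ARRAY` map is used through the `bpf_tail_call()` helper. A successful tail call replaces the running program and never returns to the caller; if the slot is empty or the chain-length limit is reached, the call falls through and the caller simply continues. The Python model below imitates only that control flow. The `TailCall`/`run_chain` names are hypothetical, verdicts are plain strings, and the default limit of 32 is an assumption, since the kernel's exact limit has differed between versions.

```python
from typing import Callable, Dict, NamedTuple, Union


class TailCall(NamedTuple):
    index: int       # slot in the program array to jump to
    fallback: str    # what the caller returns if the tail call fails


Program = Callable[[dict], Union[str, TailCall]]


def run_chain(prog_array: Dict[int, Program], entry: int, ctx: dict,
              max_tail_calls: int = 32) -> str:
    """Run prog_array[entry] and follow tail calls until a verdict is returned."""
    prog = prog_array[entry]
    taken = 0
    while True:
        result = prog(ctx)
        if not isinstance(result, TailCall):
            return result                     # final verdict
        target = prog_array.get(result.index)
        if target is None or taken >= max_tail_calls:
            return result.fallback            # failed tail call: caller carries on
        taken += 1
        prog = target                         # jump; the caller never resumes


# Hypothetical two-stage pipeline: a parser that tail-calls an IPv4 filter.
def parse_stage(ctx: dict):
    return TailCall(index=1, fallback="PASS")


def filter_stage(ctx: dict):
    return "DROP" if ctx.get("src") == "10.0.0.66" else "PASS"


print(run_chain({0: parse_stage, 1: filter_stage}, 0, {"src": "10.0.0.66"}))  # DROP
print(run_chain({0: parse_stage}, 0, {"src": "10.0.0.66"}))                   # PASS
```

Splitting work into stages this way keeps each program under the per-program instruction limit.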

The eBPF verifier checks the range of the variables used. Sometimes that is helpful: it doesn’t allow you to access a packet offset beyond what has already been validated against the packet size.

Sometimes it is annoying, because the packet pointer can be passed to a subroutine, where the access may be rejected as out of bounds even if the original packet was already checked for that size.
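Python has no verifier, so the sketch below cannot reproduce its rejections. It only shows the discipline XDP code has to follow: test that `offset + size` does not exceed `data_end` before every read of packet bytes. The function name and the untagged-Ethernet assumption (no VLAN headers) are illustrative.

```python
import struct
from typing import Optional

ETH_HLEN = 14
ETH_P_IP = 0x0800
IPV4_MIN_HLEN = 20


def ipv4_source(frame: bytes) -> Optional[str]:
    """Return the IPv4 source address, or None if the frame is not a complete IPv4 packet.

    Every read is preceded by an explicit `offset + size > data_end` test, the
    same shape of check the verifier wants to see before each packet access.
    """
    data, data_end = 0, len(frame)
    if data + ETH_HLEN > data_end:
        return None
    (ethertype,) = struct.unpack_from("!H", frame, data + 12)
    if ethertype != ETH_P_IP:
        return None
    ip = data + ETH_HLEN
    if ip + IPV4_MIN_HLEN > data_end:          # fixed part of the IPv4 header
        return None
    ihl = (frame[ip] & 0x0F) * 4               # header length incl. options
    if ihl < IPV4_MIN_HLEN or ip + ihl > data_end:
        return None
    return ".".join(str(b) for b in frame[ip + 12 : ip + 16])


ip_header = bytes([0x45, 0, 0, 20, 0, 0, 0, 0, 64, 6, 0, 0,
                   192, 168, 100, 10, 80, 80, 80, 80])
frame = bytes(12) + b"\x08\x00" + ip_header
print(ipv4_source(frame))        # 192.168.100.10
print(ipv4_source(frame[:30]))   # None (truncated IPv4 header)
```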

BPF Compilation

Errors reported for BPF programs are quite tricky, because the program is compiled into bytecode, and errors reported against that bytecode usually do not make it obvious which part of the C program they relate to.

The error message is sometimes hidden at the beginning of the dump, sometimes at the end. The instruction dump itself may be many pages long. Sometimes, the only way to identify the issue is to comment out parts of the code to figure out which line introduced it.

XDP can’t (as of November 2019):

One of the requirements was to forward traffic between the host and namespaces, containers, or VMs. Namespaces do their job properly, so XDP can access either host interfaces or namespaced interfaces. I wasn’t able to use it as a tunnel to pass traffic between them. The workaround is to use a veth pair to connect the host with a namespace and attach 2 XDP handlers (one on each side to process traffic). I’m not sure whether they can share tables (BPF maps) to pass data. However, using the veth pair diminishes the performance benefit of using XDP.

Another option is to create an AF_XDP socket as a sink for packets received on the physical interface and processed by the attached XDP program. But there are 2 major limitations:

- One cannot create dozens of AF_XDP sockets and use XDP to redirect various traffic classes into their own AF_XDP sockets for processing, because each AF_XDP socket binds to a single queue of the physical interface. Most physical and emulated HW supports only a single RX/TX queue per interface. If one AF_XDP socket is already bound, another one will fail. There are a few combinations of HW and drivers that support multiple RX/TX queues, but they have 2/4/8 queues, which doesn’t scale to hundreds of containers running in the cloud.

- Another limitation is that XDP can forward traffic to an AF_XDP socket, where the client reads the data, but when the client writes something to the AF_XDP socket, the traffic goes out immediately via the physical interface and XDP cannot see it. Therefore, XDP + AF_XDP is not viable for symmetric operations like encapsulation/decapsulation. Using a veth pair may mitigate this issue.

XDP can (as of November 2019):

- Fast incoming packet filtering. XDP can inspect fields in incoming packets and take simple actions like DROP, TX (send the packet out of the same interface it was received on), REDIRECT (to another interface), or PASS (to the kernel stack for processing). XDP can also alter packet data, e.g., swap MAC addresses, change IP addresses, ports, or the ICMP type, recalculate checksums, etc. The obvious uses are therefore:

- Firewalls (DROP)

- L2/L3 lookup & forward

- NAT – it is possible to implement static NAT indirectly (two XDP programs, each attached to its own interface, processing the traffic and forwarding it out via the other interface). Connection tracking is possible, but more complicated, because session-related data has to be preserved and exchanged in tables (BPF maps).
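Two of the rewrites listed above, swapping MAC addresses (as done before bouncing a packet back with TX) and rewriting an IPv4 address for static NAT, can be sketched in userspace Python. The checksum is patched with the incremental update from RFC 1624 (HC' = ~(~HC + ~m + m')) instead of re-summing the whole header, which is the kind of arithmetic an XDP program performs in C. The function names and the fixed 14-byte Ethernet offset (no VLAN tags) are assumptions of this sketch.

```python
def ones_complement_sum16(data: bytes) -> int:
    """16-bit one's complement sum (end-around carry) over big-endian words."""
    if len(data) % 2:
        data = bytes(data) + b"\x00"
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
    while total >> 16:                          # fold carries back in
        total = (total & 0xFFFF) + (total >> 16)
    return total


def ipv4_checksum(header: bytes) -> int:
    """Full IPv4 header checksum; the checksum field (bytes 10-11) must be zero."""
    return ~ones_complement_sum16(header) & 0xFFFF


def csum_replace4(old_csum: int, old: bytes, new: bytes) -> int:
    """Patch a checksum after a 4-byte field changes (RFC 1624: HC' = ~(~HC + ~m + m'))."""
    total = ~old_csum & 0xFFFF
    for i in (0, 2):
        old_word = (old[i] << 8) | old[i + 1]
        new_word = (new[i] << 8) | new[i + 1]
        total += (~old_word & 0xFFFF) + new_word
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def swap_macs(frame: bytearray) -> None:
    """Swap destination and source MAC addresses in place (e.g. before XDP_TX)."""
    frame[0:6], frame[6:12] = frame[6:12], frame[0:6]


def rewrite_ipv4_src(frame: bytearray, new_src: bytes) -> None:
    """Static-NAT-style rewrite of the IPv4 source address, patching the checksum."""
    ip = 14                                     # assumes untagged Ethernet
    old_src = bytes(frame[ip + 12 : ip + 16])
    old_csum = int.from_bytes(frame[ip + 10 : ip + 12], "big")
    frame[ip + 12 : ip + 16] = new_src
    frame[ip + 10 : ip + 12] = csum_replace4(old_csum, old_src, new_src).to_bytes(2, "big")


# Example: build a minimal IPv4 frame, then rewrite its source and swap MACs.
hdr = bytearray([0x45, 0, 0, 20, 0, 0, 0x40, 0, 64, 6, 0, 0,
                 192, 168, 100, 10, 80, 80, 80, 80])
hdr[10:12] = ipv4_checksum(bytes(hdr)).to_bytes(2, "big")
frame = bytearray(range(12)) + bytearray(b"\x08\x00") + hdr
rewrite_ipv4_src(frame, bytes([80, 80, 80, 102]))
swap_macs(frame)
```

The incremental update touches only the changed words, which keeps the per-packet cost constant regardless of header length.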

by Marek Závodský, PANTHEON.tech

AF_XDP

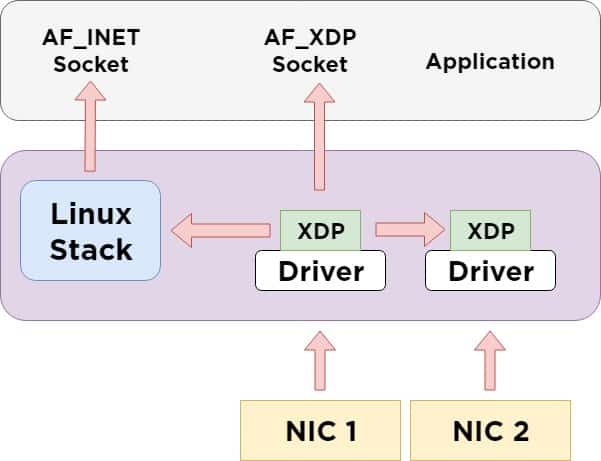

AF_XDP is a new type of socket, introduced in Linux kernel 4.18, which does not completely bypass the kernel but utilizes its functionality, making it possible to build something similar to DPDK or AF_Packet.

DPDK (Data Plane Development Kit) is a library, developed in 2010 by Intel and now under the Linux Foundation Projects umbrella, which accelerates packet processing workloads on a broad range of CPU architectures.

AF_Packet is a socket type in the Linux kernel that allows applications to send and receive raw packets through the kernel. It can create an mmap-ed ring buffer shared between the kernel and userspace, which reduces the number of system calls between the two.


As opposed to AF_Packet, AF_XDP moves frames directly to userspace, without the need to go through the whole kernel network stack, so they arrive in the shortest possible time. AF_XDP does not bypass the kernel but creates an in-kernel fast path.

It also offers advantages like zero-copy (between kernel space and userspace) or offloading of the XDP bytecode to the NIC. AF_XDP can run in interrupt mode as well as polling mode, while DPDK poll-mode drivers always poll, which means they keep the CPU cores they run on 100% busy.
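The fast path to userspace works through rings that the kernel and the application share via mmap: for received packets, the kernel produces descriptors into an RX ring and the application consumes them, and each side only ever advances its own index. The Python class below models just that single-producer/single-consumer index logic, under loud simplifications: Python integers never wrap (the real indices are 32-bit and wrap around), there are no memory barriers, and the UMEM frame area and the fill/completion rings are left out. The power-of-two size check mirrors a real AF_XDP requirement; the class, method names, and descriptor tuples are hypothetical.

```python
class SpscRing:
    """Single-producer/single-consumer ring with free-running indices."""

    def __init__(self, size: int):
        if size <= 0 or size & (size - 1):
            raise ValueError("ring size must be a power of two")
        self.mask = size - 1
        self.slots = [None] * size
        self.producer = 0   # advanced only by the producer (e.g. the kernel for RX)
        self.consumer = 0   # advanced only by the consumer (e.g. the application)

    def used(self) -> int:
        return self.producer - self.consumer

    def produce(self, desc) -> bool:
        if self.used() == len(self.slots):
            return False                          # full: nothing is overwritten
        self.slots[self.producer & self.mask] = desc
        self.producer += 1                        # publish only after the slot is filled
        return True

    def consume(self):
        if self.used() == 0:
            return None                           # empty
        desc = self.slots[self.consumer & self.mask]
        self.consumer += 1                        # hand the slot back to the producer
        return desc


# Hypothetical RX descriptors: (offset into the shared frame area, length).
rx = SpscRing(4)
for d in [(0, 60), (2048, 1514), (4096, 98)]:
    rx.produce(d)
print(rx.consume(), rx.used())  # (0, 60) 2
```

Because each index has a single writer, producer and consumer can run on different CPUs without locks, which is what keeps the number of kernel/userspace transitions low.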

Future potential

One future potential of offloaded XDP (one of the ways XDP bytecode can be executed) is that such a program runs directly on the NIC and therefore does not use any CPU power, as noted at FOSDEM 2018:

Because XDP is so low-level, the only way to move packet processing further down to earn additional performances is to involve the hardware. In fact, it is possible since kernel 4.9 to offload eBPF programs directly onto a compatible NIC, thus offering acceleration while retaining the flexibility of such programs.

Decentralization

Furthermore, all signs point to a theoretical, decentralized architecture – with emphasis on community efforts in offloading workloads to NICs – for example, a decentralized NIC switching architecture. This type of offloading would reduce the cost of various expensive tasks, such as the CPU itself having to process incoming packets.

We are excited about the future of AF_XDP and looking forward to the mentioned possibilities!

For a more detailed description, you can download a presentation with details surrounding AF_XDP & DPDK and another from FOSDEM 2019.

Update 08/15/2020: We have upgraded this page, its content, and information for you to enjoy!

You can contact us at https://pantheon.tech/

Explore our Pantheon GitHub.

Watch our YouTube Channel.

[Integration] Network Service Mesh & Cloud-Native Functions

/in CDNF.io, News /by PANTHEON.tech

by Milan Lenčo & Pavel Kotúček

As part of a webinar, in cooperation with the Linux Foundation Networking, we have created two repositories with examples from our demonstration “Building CNFs with FD.io VPP and Network Service Mesh + VPP Traceability in Cloud-Native Deployments“:

Check out our full webinar, held in cooperation with the Linux Foundation Networking, on YouTube:

What is Network Service Mesh (NSM)?

Recently, Network Service Mesh (NSM) has been drawing lots of attention in the area of network function virtualization (NFV). Inspired by Istio, Network Service Mesh maps the concept of a Service Mesh to L2/L3 payloads. It runs on top of (any) CNI and builds additional connections between Kubernetes Pods at run time, based on the Network Service definition deployed via CRD.

Unlike Contiv-VPP, for example, NSM is mostly controlled from within applications through the provided SDK. This approach has its pros and cons.

Pros: Gives programmers more control over the interactions between their applications and NSM

Cons: Requires a deeper understanding of the framework to get things right

Another difference is that NSM intentionally offers only minimalistic point-to-point connections between pods (or clients and endpoints, in their terminology). Everything that can be implemented via CNFs is left out of the framework. Even things as basic as connecting a service chain to external physical interfaces, or attaching multiple services to a common L2/L3 network, are not supported and are instead left to the users (programmers) of NSM to implement.

Integration of NSM with Ligato

At PANTHEON.tech, we see the potential of NSM and decided to tackle the main drawbacks of the framework. For example, we have developed a new plugin for Ligato-based control-plane agents that allows seamless integration of CNFs with NSM.

Instead of having to use the low-level, imperative NSM SDK, users (not necessarily software developers) can use the standard northbound (NB) protobuf API to define the connections between their applications and other network services in a declarative form. The plugin then uses the NSM SDK behind the scenes to open the connections and create the corresponding interfaces, which the CNF is then ready to use.
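The difference between an imperative SDK and a declarative NB API can be illustrated with a reconciliation step: the user states the desired end state, and the agent works out the create/update/delete operations against what currently exists. The sketch below is a generic Python illustration of that idea, not Ligato’s or the plugin’s code; the interface names and attributes are made up.

```python
from typing import Dict, List, Tuple

Config = Dict[str, dict]   # interface name -> desired attributes


def reconcile(desired: Config, current: Config) -> List[Tuple[str, str]]:
    """Derive the (operation, interface) steps that turn `current` into `desired`."""
    ops = [("delete", name) for name in sorted(current.keys() - desired.keys())]
    for name in sorted(desired):
        if name not in current:
            ops.append(("create", name))
        elif current[name] != desired[name]:
            ops.append(("update", name))
    return ops


# Hypothetical interfaces; the user only states the end result.
desired = {"nsm-to-nat": {"ip": "192.168.100.10/24"}, "tap0": {"mtu": 1500}}
current = {"tap0": {"mtu": 9000}, "stale-if": {}}
print(reconcile(desired, current))
# [('delete', 'stale-if'), ('create', 'nsm-to-nat'), ('update', 'tap0')]
```

Applying the same desired state twice yields no operations, which is what makes a declarative configuration safe to resubmit.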

The CNF components, therefore, do not have to care about how the interfaces were created, whether it was by Contiv, via NSM SDK, or in some other way, and can simply use logical interface names for reference. This approach allows us to decouple the implementation of the network function provided by a CNF from the service networking/chaining that surrounds it.

The plugin for Ligato-NSM integration is shipped both separately, ready for import into existing Ligato-based agents, and also as a part of our NSM-Agent-VPP and NSM-Agent-Linux. The former extends the vanilla Ligato VPP-Agent with the NSM support while the latter also adds NSM support but omits all the VPP-related plugins when only Linux networking needs to be managed.

Furthermore, since most of the common network features are already provided by Ligato VPP-Agent, it is often unnecessary to do any additional programming work whatsoever to develop a new CNF. With the help of the Ligato framework and tools developed at Pantheon, achieving the desired network function is often a matter of defining network configuration in a declarative way inside one or more YAML files deployed as Kubernetes CRD instances. For examples of Ligato-based CNF deployments with NSM networking, please refer to our repository with CNF examples.

Finally, the repository also includes a controller for a K8s CRD, defined to allow deploying network configuration for Ligato-based CNFs like any other Kubernetes resource defined in YAML-formatted files. Usage examples can also be found in the repository with CNF examples.

CNF Chaining using Ligato & NSM (example from LFN Webinar)

In this example, we demonstrate the capabilities of the NSM agent – a control-plane for Cloud-native Network Functions deployed in a Kubernetes cluster. The NSM agent seamlessly integrates the Ligato framework for Linux and VPP network configuration management, together with Network Service Mesh (NSM) for separating the data plane from the control plane connectivity, between containers and external endpoints.

In the presented use-case, we simulate a scenario in which a client from a local network needs to access a web server with a public IP address. The necessary Network Address Translation (NAT) is performed in-between the client and the webserver by the high-performance VPP NAT plugin, deployed as a true CNF (Cloud-Native Network Function) inside a container. For simplicity, the client is represented by a K8s Pod running an image with cURL installed (as opposed to being an external endpoint, as it would be in a real-world scenario). For the server side, the minimalistic TestHTTPServer implemented in VPP is utilized.
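The core idea behind the NAT step, source NAT with a session table so that replies can be translated back, can be sketched in a few lines of Python. This is a generic NAPT model, not the VPP NAT plugin’s implementation; the class name, the sequential port allocation (with no exhaustion handling), and the use of the demo’s 192.168.100.10 and 80.80.80.102 addresses are illustrative assumptions.

```python
import ipaddress
from typing import Dict, Optional, Tuple

Endpoint = Tuple[ipaddress.IPv4Address, int]


class ToyNat44:
    """Dynamic source NAT (NAPT): inside (ip, port) <-> outside (ip, port)."""

    def __init__(self, outside_ip: str, first_port: int = 1024):
        self.outside_ip = ipaddress.IPv4Address(outside_ip)
        self.next_port = first_port               # no exhaustion handling in this sketch
        self.out_by_in: Dict[Endpoint, Endpoint] = {}
        self.in_by_out: Dict[Endpoint, Endpoint] = {}

    def outbound(self, src_ip: str, src_port: int) -> Endpoint:
        """Translate the source of a client-to-server packet, creating a session if needed."""
        inside = (ipaddress.IPv4Address(src_ip), src_port)
        if inside not in self.out_by_in:
            outside = (self.outside_ip, self.next_port)
            self.next_port += 1
            self.out_by_in[inside] = outside
            self.in_by_out[outside] = inside
        return self.out_by_in[inside]

    def inbound(self, dst_ip: str, dst_port: int) -> Optional[Endpoint]:
        """Translate the destination of a reply back; None means no session (drop)."""
        return self.in_by_out.get((ipaddress.IPv4Address(dst_ip), dst_port))


nat = ToyNat44("80.80.80.102")
ip, port = nat.outbound("192.168.100.10", 40000)
print(ip, port)  # 80.80.80.102 1024
```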

In all three Pods, an instance of the NSM Agent runs to communicate with the NSM manager via the NSM SDK and negotiate additional network connections that connect the pods into a chain:

Client <-> NAT-CNF <-> web-server (see diagrams below)

The agents then use the features of the Ligato framework to further configure Linux and VPP networking around the additional interfaces provided by NSM (e.g. routes, NAT).

The configuration to apply is described declaratively and submitted to NSM agents in a Kubernetes native way through our own Custom Resource called CNFConfiguration. The controller for this CRD (installed by cnf-crd.yaml) simply reflects the content of applied CRD instances into an ETCD datastore from which it is read by NSM agents. For example, the configuration for the NSM agent managing the central NAT CNF can be found in cnf-nat44.yaml.

More information about cloud-native tools and network functions provided by PANTHEON.tech can be found on our website CDNF.io.

Networking Diagram

Routing Diagram

Steps to recreate the Demo

- Clone the following repository.

- Create a Kubernetes cluster and deploy a CNI (network plugin) of your preference

- Install Helm version 2 (latest NSM release v0.2.0 does not support Helm v3)

- Run helm init to install Tiller and to set up a local configuration for Helm:

$ helm init

- Create a service account for Tiller:

$ kubectl create serviceaccount --namespace kube-system tiller
$ kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller
$ kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'

- Deploy NSM using Helm:

$ helm repo add nsm https://helm.nsm.dev/
$ helm install --set insecure=true nsm/nsm

- Deploy ETCD + controller for CRD, both of which will be used together to pass configuration to NSM agents:

$ kubectl apply -f cnf-crd.yaml

- Submit the definition of the network topology for this example to NSM:

$ kubectl apply -f network-service.yaml

- Deploy and start a simple VPP-based webserver with NSM-Agent-VPP as the control-plane:

$ kubectl apply -f webserver.yaml

- Deploy the VPP-based NAT44 CNF with NSM-Agent-VPP as the control-plane:

$ kubectl apply -f cnf-nat44.yaml

- Deploy a Pod with the NSM-Agent-Linux control-plane and curl, for testing the connection to the webserver through the NAT44 CNF:

$ kubectl apply -f client.yaml

- Test connectivity between client and webserver:

$ kubectl exec -it client -- curl 80.80.80.80/show/version

- To confirm that client’s IP is indeed source NATed (from 192.168.100.10 to 80.80.80.102) before reaching the web server, one can use the VPP packet tracing:

$ kubectl exec -it webserver -- vppctl trace add memif-input 10
$ kubectl exec -it client -- curl 80.80.80.80/show/version
$ kubectl exec -it webserver -- vppctl show trace
00:01:04:655507: memif-input
  memif: hw_if_index 1 next-index 4
    slot: ring 0
00:01:04:655515: ethernet-input
  IP4: 02:fe:68:a6:6b:8c -> 02:fe:b8:e1:c8:ad
00:01:04:655519: ip4-input
  TCP: 80.80.80.100 -> 80.80.80.80
...